Abstract

One of the main problems studied in statistics is the fitting of models. Ideally, we would like to explain a large dataset with as few parameters as possible. There have been numerous attempts at automatizing this process. Most notably, the Least Angle Regression algorithm, or LARS, is a computationally efficient algorithm that ranks the covariates of a linear model. The algorithm is further extended to a class of distributions in the generalized linear model by using properties of the manifold of exponential families as dually flat manifolds. However this extension assumes that the normalizing constant of the joint distribution of observations is easy to compute. This is often not the case, for example the normalizing constant may contain a complicated integral. We circumvent this issue if the normalizing constant satisfies a holonomic system, a system of linear partial differential equations with a finite-dimensional space of solutions. In this paper we present a modification of the holonomic gradient method and add it to the extended LARS algorithm. We call this the holonomic extended least angle regression algorithm, or HELARS. The algorithm was implemented using the statistical software R, and was tested with real and simulated datasets.

Similar content being viewed by others

1 Introduction

In model selection, one would ideally want to choose a statistical model that fits the data well, while still being simple enough to allow meaningful interpretations and explanatory power. In this paper we consider model simplification of linear and generalized linear models, where we want to choose a subset of covariates to include in the model.

In two decades, there have been many advances in sparse modeling. One of the most famous methods is L1-regularization: Least Absolute Shrinkage and Selection Operator (LASSO [23]). LASSO is defined only for the normal linear regression problem. However, the idea of LASSO has been applied to many other problems. For example, Park and Hastie [19] considered the generalized linear models and Yuan and Lin [25] treated Gaussian graphical models. Least Angle Regression (LARS, [6]) is an efficient algorithm for computing the LASSO solution paths. The LARS algorithm is described based on Euclidean geometry because LARS considers the normal linear regression problem. Hirose and Komaki [10] proposed the Extended LARS (ELARS) algorithm based on the information geometry of dually flat spaces. ELARS is an algorithm for estimating and selecting parameters in the generalized linear models. The idea of ELARS was applied to edge selection in the Gaussian graphical models [11] and the contingency table models [12]. Another version of geometrical extensions of LARS was given by Augugliaro, Mineo and Wit [4], and a geometrical approach to sparse modeling was also proposed in [26].

The ELARS algorithm by Hirose and Komaki [10] has a computational drawback in that it assumes that the potential function (i.e. the normalizing constant) of the underlying probability distribution function is easy to compute. This is often not the case, which motivates us to use the holonomic gradient method, a computationally efficient method for computing potential functions and their gradients, introduced by Nakayama et al. [17]. There are a few other methods that deal with non-normalized models in the literature. In [13] Hyvärinen proposed a method for estimating parameters of non-normalized models. This method makes use of a 2-local proper scoring rule, which can be computed without knowledge of the normalizing constant [20]. In the case of the ELARS algorithm, such methods are of limited use since we need to know the value of the normalizing constant and its derivatives at several points throughout the algorithm.

A system of linear partial differential equations is called a holonomic system if it has a finite-dimensional space of solutions. Refer to Sect. 3 for a more precise description. If the potential function satisfies a holonomic system, we can use a modification of the holonomic gradient method to keep track of its value at each step of the algorithm and update it when needed in a computationally efficient way. We call the combined algorithm the holonomic extended LARS algorithm, or HELARS.

The main result of the paper is an implementation in R of the HELARS. In one explicit example we consider the normal distribution restricted to positive values as the underlying distribution. This distribution is simple enough as an introductory example due to its similarities with the well-known normal distribution, especially with regards to e- and m-affine coordinate conversions. Despite the truncated normal having no closed form potential function, our implementation of the algorithm does not use numerical integration to compute its value. Of course, the potential function of the truncated normal distribution is nothing but the Gaussian cumulative distribution function, which is implemented as a built-in function in almost all software packages. However, since the truncated normal model is a special case of more complicated models such as the exponential-polynomial distributions [8] and the multivariate truncated normal distributions [14], our result will become a prototype of the overall method.

We will also consider another more complicated example, which we will call the weighted truncated normal distribution. In this example the coordinate conversions become complicated. However, by exploiting the holonomic system we can recover relations between the normalizing constant and its derivatives. Since throughout the algorithm we keep track of the normalizing constant and derivatives, this will allow us to recover any coordinate at any point in the algorithm.

The paper is organized as follows. In Sect. 2 we review basic definitions and results concerning generalized linear models. In Sect. 3 we present the holonomic gradient method. Section 4 discusses the extended LARS algorithm [10], and we look at what necessary changes and additions are needed for the HELARS algorithm. In Sect. 5 we walk through the HELARS algorithm using the two examples mentioned before. We validate the algorithm using both real and simulated datasets in Sect. 6. Finally, we end with a discussion in Sect. 7.

2 Generalized linear models

In this section we will review some foundations of generalized linear models [15]. We will follow [1] in our exposition.

Definition 1

Consider a statistical model \({\mathcal {P}} = \{p_{\varvec{\xi }} \mid \varvec{\xi } \in \Xi \}\), where \(\Xi \subseteq \mathbb {R}^d\). We say that \({\mathcal {P}}\) is an exponential family if for \({\mathbf {y}} = (y_1,\dotsc ,y_n) \in \mathbb {R}^n\), \(\varvec{\xi } = (\xi ^1,\dotsc , \xi ^d)\in \Xi \) we have

for some \({\mathbf {F}} :\mathbb {R}^n \rightarrow \mathbb {R}^d\) and \(C :\mathbb {R}^n \rightarrow \mathbb {R}\), and where \(\psi (\varvec{\xi })\) is the logarithm of the normalizing constant, i.e.

Example 1

The normal distribution is a member of the exponential family. It has the probability density function

Note that here \(C({\mathbf {y}}) = 0\), the natural parameter is \(\varvec{\xi } = \begin{bmatrix} -\frac{1}{2\sigma ^2}&\frac{\mu }{\sigma ^2} \end{bmatrix}^T\), \({\mathbf {F}}( {\mathbf {y}}) = \begin{bmatrix} y^2&y \end{bmatrix}^T\) and \(\psi (\varvec{\xi }) = \frac{(\xi ^2)^2}{4\xi ^1} + \log \sqrt{-\pi /\xi ^1}\).

Definition 2

The Fisher information matrix of a distribution \(p({\mathbf {y}}\mid \varvec{\xi })\) at a point \(\varvec{\xi }\) is a \(d \times d\) matrix \({\mathbf {G}}(\varvec{\xi })=(g_{i,j})\) with entries given by

Equivalently, we may write the elements of the Fisher information matrix as

Next we introduce generalized linear models. Assume that we have n independent observations \(y_1,y_2,\dotsc , y_n\). Each observation \(y_i\) is sampled from an exponential family with scalar parameter \(\xi ^i\), which will depend on a covariate vector \(\mathbf {x^i} = (x^i_1, \dotsc , x^i_d)\). The \((n \times d)\) matrix \({\mathbf {X}} = (x^i_j)\) is called the design matrix. We assume that the covariate vector \(\mathbf {x^i}\) influences the distribution of \(y_i\) only via the linear predictor \(\zeta ^i\), defined as

or using matrices \(\varvec{\zeta } := {\mathbf {X}}\varvec{\theta }\), for some vector \(\varvec{\theta } \in \mathbb {R}^d\). We can also add an intercept term by defining a new design matrix \(\tilde{{\mathbf {X}}} := \begin{bmatrix} {\mathbf {1_{n\times 1}}}&{\mathbf {X}} \end{bmatrix}\) so that \(\varvec{\zeta } := \tilde{{\mathbf {X}}}\varvec{\theta },\) for some vector \(\varvec{\theta } = (\theta ^0, \theta ^1, \dotsc , \theta ^d)^T\). We will always use an intercept term throughout this paper.

The final piece of a generalized linear model, the link function \(g(\mu )\), determines in which way the linear predictor influences the distribution by setting

where \(\mu _i\) is the expectation of \(y_i\). The canonical link is the link function g for which \(\xi ^i = \eta ^i\) for all \(i=1,2,\dotsc ,n\).

The combination of an exponential family, design matrix and link function define a generalized linear model (GLM). The model has \(d+1\) parameters \(\theta ^0, \theta ^1,\dotsc ,\theta ^d\) that we want to estimate given the response \({\mathbf {y}}\) and design matrix \({\mathbf {X}}\). Fitting a GLM is usually more delicate than fitting a linear model. The standard goal is to find the parameters \(\theta ^0,\theta ^1,\dotsc ,\theta ^d\) which maximize the (log-)likelihood. The log-likelihood of the joint distribution of n observations is \(L = \sum _{i=1}^n L_i\), where \(L_i = \log p(y_i \mid \xi ^i)\). This is maximized when all the partial derivatives \(\frac{\partial L}{\partial \theta ^k}\) vanish, assuming that the log-likelihood is strictly concave. In general, the partial derivatives will not be linear functions of \(\varvec{\theta }\), so we have to resort to numerical methods to compute the MLE.

One possible iterative method to find the maximum likelihood estimate \(\hat{\varvec{\theta }}\) is the Newton–Raphson method. Note that we will use this method extensively along with the holonomic gradient method in our implementation (see Sect. 5) for both maximum likelihood estimation and other optimization tasks. We start with an initial guess \(\varvec{\theta }_{(0)}\). For each \(k\ge 0\), we approximate the function at the point \(\varvec{\theta }_{(k)}\) with a polynomial of degree 2. Finding the extremum of the approximation is easy, and we set the point reaching the extremum as the next estimate \(\varvec{\theta }_{(k+1)}\).

3 Holonomic gradient method

In this section we will describe the holonomic gradient method (HGM), first proposed by Nakayama et al. [17]. Consider first the “classical” gradient descent algorithm, which is used to find a local minimum of a function \(F :\mathbb {R}^n\rightarrow \mathbb {R}\). Given a starting point (or initial guess) \({\mathbf {x}}^{(0)}\), we know that the value of the function decreases the fastest in the direction opposite to the gradient. In other words, we should choose the next point

for some stepsize \(\gamma _1 > 0\). Given a suitably chosen stepsize \(\gamma _1\), we have \(F({\mathbf {x}}^{(1)}) < F({\mathbf {x}}^{(0)})\). We then iterate

while choosing a suitable stepsize \(\gamma _k > 0\) at each iteration. We can terminate the algorithm when the gradient is small enough (i.e. when we are close to a local minimum), or when a certain number of iterations have elapsed. Details concerning the choice of step size and efficiency of this method will not be discussed here; see for example [3].

The issue with this method is that it requires computing the gradient \(\varvec{\nabla } F({\mathbf {x}}^{(k)})\) at each step. In many statistical applications, the function we want to optimize will be a likelihood function, which will in some cases contain an integral that does not have a closed form expression, and has to be computed using numerical methods.

The holonomic gradient method takes a different approach to function minimization. The main idea is still the same in that we use the same iterative step as in the classical gradient descent in (3).

The difference is how we compute the gradient. We will construct a vector \({\mathbf {Q}}\) and a set of matrices \({\mathbf {P}}_i\) to form a Pfaffian system

The vector \({\mathbf {Q}}\) will be chosen so that the gradient \(\varvec{\nabla } F({\mathbf {x}}^{(k)})\) is easily recoverable, typically \(\varvec{\nabla } F({\mathbf {x}}^{(k)}) = {\mathbf {A}}({\mathbf {x}}^{(k)}){\mathbf {Q}}({\mathbf {x}}^{(k)})\) for some matrix \({\mathbf {A}}\). With the gradient, we can determine the next point \({\mathbf {x}}^{(k+1)}\) using (3). Given \({\mathbf {Q}}({\mathbf {x}}^{(k)})\), the value of \(\mathbf {Q}\) in the previous step, we can compute its value in the next step \(\mathbf {Q}({\mathbf {x}}^{(k+1)})\) by solving the Pfaffian system (4) using standard numerical ODE solvers.

Observe that we can also implement the Newton–Raphson method using the holonomic gradient framework. The update step will be

and we can also recover the Hessian easily from the Pfaffian system, since there is a matrix \({{\mathbf {B}}}({\mathbf {x}}^{(k)})\) such that \(\varvec{{{\,\mathrm{Hess}\,}}}(F)({\mathbf {x}}^{(k)}) = {{\mathbf {B}}}({\mathbf {x}}^{(k)}) {\mathbf {Q}}({\mathbf {x}}^{(k)})\).

3.1 Rings of differential operators

In order to construct Pfaffian systems, we will be looking at certain ideals in a ring of differential operators. Intuitively, it can be thought of as a regular polynomial ring whose “variables” are differential operators \(\partial _1,\dotsc ,\partial _n\), and coefficients are rational functions, i.e. functions of the form \(f(x_1,\dotsc ,x_n)/ g(x_1,\dotsc ,x_n)\), where f, g are both polynomials. The set of all rational functions (with complex coefficients in n variables) is a field denoted by \(\mathbb {C}(x_1,\dotsc ,x_n)\), in contrast to the regular polynomial ring’s \(\mathbb {C}[x_1,\dotsc ,x_n]\).

Definition 3

The ring \(R_n\) of differential operators with rational function coefficients is

Note that the “multiplication” operation inside the ring is actually a composition of operators. Multiplication in this ring satisfies the following commutation rules

Since every element in \(R_n\) is an operator, there is a natural action \(\bullet \) on the set \(C^\infty \) of smooth functions. If \(f(x_1,\dotsc ,x_n) \in C^\infty \), then

We then note that \((\partial _i x_i) \bullet f = (x_i \partial _i + 1) \bullet f\), which motivates the definition of multiplication in the ring.

Because of these commutation rules, when multiplying two elements in \(R_n\), we can always rearrange factors within a term so that the differential operators \(\partial _i\) are on the right of each term, as in Definition 3. For example, \(\partial _1 x_1x_2 \partial _2 = x_1x_2 \partial _1 \partial _2 + x_2 \partial _2\).

Most standard theorems and algorithms from algebraic geometry in the regular (commutative) polynomial ring \(k[x_1,\dotsc , x_n]\) over an arbitrary field k carry over to \(R_n\) with minor modifications. Because of this, and by slight abuse of notation, we will write \(R_n\) as

where the coefficient field is \(\mathbb {C}(x_1,\dotsc ,x_n)\) and the angled brackets around the “variables” \(\partial _i\) emphasize the non-commutativity.

Remark 1

As \(R_n\) is not a commutative ring, we have to distinguish between left and right ideals. In the following and throughout the manuscript, any mention of an ideal will always be a left ideal, following the convention in [9].

In particular, Macaulay’s theorem will be useful in the next section. For \(\alpha \in \mathbb {Z}_{\ge 0}^n\), let \(\partial ^\alpha := \partial _1^{\alpha _1}\partial _2^{\alpha _2} \cdots \partial _n^{\alpha _n}\). Let \(\prec \) be a term orderFootnote 1 on the differential operators \(\partial _i\). Let \(f = \sum _\alpha c_\alpha \partial ^\alpha \in R_n\). The leading term of f is the term \({{\,\mathrm{LT}\,}}(f) = c_{\alpha '}\partial ^{\alpha '}\) such that \(\partial ^{\alpha } \prec \partial ^{\alpha '}\) for all \(\alpha \ne \alpha '\) such that \(c_\alpha \ne 0\). For an ideal \(I \subset R_n\), we define \({{\,\mathrm{LT}\,}}(I)\) as the ideal generated by all leading terms in I. We note that in general \({{\,\mathrm{LT}\,}}(I)\) is not a subset of I nor is \({{\,\mathrm{LT}\,}}(I)\) generated by the leading terms of the generators of I.

Theorem 1

(Macaulay’s theorem) Let \(I\subset R_n\) be an ideal. The set of standard monomials

is a basis of \(R_n/I\) as a vector space over \(R_n\).

We say an ideal \(I\subseteq R_n\) is 0-dimensional if there are finitely many standard monomials. A necessary and sufficient condition for I to be 0-dimensional is that for all i the following holds

For more details on computations and algorithms in rings of differential operators, see [9].

Remark 2

Instead of the ring \(R_n\), many algorithms actually use the Weyl algebra \(D_n := \mathbb {C}\langle x_1,\dotsc , x_n, \partial _1, \dotsc , \partial _n \rangle \), which consists of elements of the form \(\sum _\alpha c_\alpha \partial ^\alpha \), where the \(c_\alpha \) are polynomials, as opposed to rational functions. In \(D_n\), the counterpart of a zero dimensional ideal of \(R_n\) is called a holonomic ideal. There is a natural homomorphism \(D_n \hookrightarrow R_n\), which gives the following connection: the extension of a holonomic ideal is zero dimensional, and the restriction of a zero dimensional ideal is holonomic. For the sake of this manuscript, the aforementioned connection will suffice; for a more complete treatment of holonomic ideals, see [9].

3.2 Pfaffian systems

Let \(f(x_1,\dotsc ,x_n) \in C^\infty \) be a function, and \(\ell \in R_n\). When \(\ell \bullet f = 0\), we say that f is annihilated by \(\ell \). We say that f is annihilated by an ideal \(I \subset R_n\) if f is annihilated by all \(\ell \in I\). Observe that if \(I = \langle \ell _1,\dotsc ,\ell _s \rangle \), f is annihilated by I if and only if f is annihilated by all \(\ell _i\). Assume that I is 0-dimensional, and let \(s_1 = 1,s_2,\dotsc ,s_r\) be the standard monomials, which are the \(\mathbb {C}(x_1,\dotsc ,x_n)\)-vector space generators of \(R_n/I\). For all \(1\le i \le n\) and \(1\le j \le r\), we can look at the image of the operator \(\partial _i s_j\) in the quotient \(R_n/I\) (under the canonical map \(p \mapsto p+I\)), and write it as a \(\mathbb {C}(x_1,\dotsc ,x_n)\)-linear combination of the standard monomials

where \(p^i_{jk} \in \mathbb {C}(x_1,\dotsc ,x_n)\) for all \(1 \le i \le n\) and \(1 \le j,k \le r\). Thus, if we define the vector \({\mathbf {S}} = (s_1, s_2, \dotsc , s_r)^T\), then for each i, there is a matrix \({\mathbf {P}}_i\) with entries in \(\mathbb {C}(x_1,\dotsc ,x_n)\) such that

with \(({\mathbf {P}}_i)_{jk} = p^i_{jk}\).

Define the vector \({\mathbf {Q}} = (s_1 \bullet f, s_2 \bullet f, \dotsc , s_r \bullet f)^T\). Since f is annihilated by all elements in I, equation (6) is true when we replace \({\mathbf {S}}\) by \(\mathbf {Q}\). We get the following system of differential equations

called the Pfaffian system. Because we chose \(s_1 = 1\), the gradient of f can be recovered from the first elements of each equation in the Pfaffian system

By following the procedure above, one can construct a Pfaffian system given a 0-dimensional (or holonomic) ideal annihilating our function f. This is indeed desirable, since finding a 0-dimensional annihilating ideal is often easier than finding a Pfaffian system from scratch. One noteworthy fact is that if f has a holonomic annihilating ideal in \(D_n\), then its integral over one variable \(\int f \, {\mathrm {d}}x_i\) also has a holonomic annihilating ideal in \(D_{n-1}\). This is extremely useful when using the holonomic gradient method, since the normalizing constant will usually contain an integral. Oaku [18] describes an algorithm for computing the (holonomic) annihilating ideal of an integral.

4 Holonomic extended LARS

In this section, we will describe the holonomic extended least angle regression algorithm. We will also compute explicit forms for the Fisher information matrix, divergence, and coordinate conversion functions between the e-affine and m-affine coordinates.

Consider a set of observed data \(\{y_a, \mathbf {x^a}=(x^a_1,\dotsc ,x^a_d) \mid a=1,2,\dotsc ,n\}\), where \(\mathbf {y} = (y_1,\dotsc ,y_n)^T\) is the response vector, and \({\mathbf {X}} = (x^a_j)\) is the design matrix, which has dimensions \((n \times d)\). We will also add an intercept term to the model, so the design matrix becomes \(\tilde{\mathbf {X}} = \begin{bmatrix} {\mathbf {1_{n\times 1}}}&{\mathbf {X}} \end{bmatrix}\). We will consider exponential families of the form

We will define some notation. Let \(\varvec{\xi }\) be the natural parameter, a \(n+r\) sized vector containing elements \(\xi ^i\). We can split \(\varvec{\xi }\) into two subvectors: we call \(\varvec{\xi }'\) the subvector containing the first n elements, i.e. \(\varvec{\xi }' = (\xi ^a)_{a=1}^n\), and we call \(\varvec{\xi }''\) the subvector containing the last r elements, i.e. \(\varvec{\xi }'' = (\xi ^b)_{b=n+1}^{n+r}\). Hence \(\varvec{\xi } = (\varvec{\xi }', \varvec{\xi }'')^T\). The function \(\psi ^*(\varvec{\xi })\) is the potential function of \(\varvec{\xi }\), and it is equal to the logarithm of the normalizing constant of the distribution

In a generalized linear model with canonical link function, the natural parameter is related to linear predictor by \(\varvec{\xi }' = \tilde{{\mathbf {X}}} \varvec{\theta }'\), where \(\varvec{\theta }' = (\theta ^0, \theta ^1, \dotsc , \theta ^d)^T\) is a parameter vector. In addition, as in [10], we require r additional parameters that are equal to \(\varvec{\xi }''\). Hence we can write \(\varvec{ \theta }'' = \varvec{\xi }''\), and define \(\varvec{\theta } = (\varvec{\theta }', \varvec{\theta }'')\). Equation (8) thus becomes

where the potential function of \(\varvec{\theta }\) is \(\psi (\varvec{\theta }) = \psi ^*(\tilde{{\mathbf {X}}} \varvec{\theta }', \varvec{\theta }'')\). Alternatively, define the sufficient statistic

and an \((n+r) \times (d+r+1)\) block-diagonal matrix

where \({\mathbf {0}}_{n\times m}\) and \({\mathbf {I}}_{n\times n}\) are respectively the \((n\times m)\) zero matrix and the \((n\times n)\) identity matrix. Then we have the identities

and the probability density function becomes

We also note that when \({\mathbf {X}}_B\) has full rank and \(\varvec{\xi }\) is (approximately) in the column space, we can recover \(\varvec{\theta }\) using least-squares.

The \(\varvec{\xi }\) coordinate, being the natural parameter of an exponential family, is the e-affine coordinate of the model manifold. The corresponding m-affine coordinate \(\varvec{\mu }\) is the expectation parameter \(\varvec{\mu } = {{\,\mathrm{E}\,}}[{\mathbf {Y}}]\) and its potential function is defined as \(\phi ^*(\varvec{\mu }) = \varvec{\xi } \cdot \varvec{\mu } - \psi ^*(\varvec{\xi })\). The model manifold defined by the coordinates \(\varvec{\theta }\) is a submanifold of the model defined by the \(\varvec{\xi }\) coordinates. The e-affine coordinate of this submanifold is \(\varvec{\theta }\), and there is a dual m-affine coordinate \(\varvec{\eta }\) which is related to \(\varvec{\mu }\) by

Note that the Fisher information matrix of the model in (8) is equal to the Hessian of the potential function \(\psi ^*(\varvec{\xi })\), denoted \({\mathbf {G}}^* = (g^*_{i,j})\). Similarly, denote the Hessian of the potential function of the m-affine coordinates \(\phi ^*(\varvec{\mu })\) as the matrix \({\mathbf {G}}_* = (g_*^{i,j})\). Since \(\varvec{\xi }\) and \(\varvec{\mu }\) are dual coordinates, the matrices \({\mathbf {G}}^*\) and \({\mathbf {G}}_*\) are inverses of each other.

The Hessian \({\mathbf {G}}(\varvec{\theta }) = (g_{i,j})\) of the potential function \(\psi (\varvec{\theta }) (= \psi ^*(\tilde{{\mathbf {X}}}\varvec{\theta }', \varvec{\theta }''))\) can be recovered using the chain rule:

Using the identities in (9) and (10), we see that

Thus (11) becomes

which implies that \({\mathbf {G}} = {\mathbf {X}}_B^T {\mathbf {G}}^*{\mathbf {X}}_B\). We denote elements of its inverse with superscripts: \({\mathbf {G}}^{-1} = (g^{i,j})\). Similar to the previous case, we have

Remark 3

In subsequent sections we will make extensive use of matrix and vector differentiation. The convention in [16] will be used: the shape of \(\frac{\partial \mathbf {f}}{\partial \mathbf {x}}\) depends either on the shape of \(\mathbf {f}\) or the shape of \({\mathbf {x}}^T\). For example, differentiating a scalar by a length n column vector yields a length n row vector

Differentiating a length m column vector by a scalar yields a length m column vector

Finally, differentiating a length m column vector by a length n column vector yields an \((m \times n)\) matrix, the Jacobian.

This notation allows the natural use of the chain rule for derivatives \( \frac{\partial {\mathbf {f}}({\mathbf {g}}({\mathbf {x}}))}{\partial {\mathbf {x}}} = \frac{\partial {\mathbf {f}}({\mathbf {g}}({\mathbf {x}}))}{\partial {\mathbf {g}}({\mathbf {x}})}\frac{\partial {\mathbf {g}}({\mathbf {x}})}{\partial {\mathbf {x}}}\) with the usual matrix multiplication between the two terms on the right hand side. Furthermore, we can express the Hessian of a scalar valued function \(f({\mathbf {x}})\) as \({\mathbf {{{\,\mathrm{Hess}\,}}}}(f) = \frac{\partial ^2 f}{\partial {\mathbf {x}} \partial { \mathbf {x}}^T}\)

4.1 Mixed coordinate conversion

Next, consider a point P on the dually flat manifold

It is characterized by the e- and m-affine coordinates \(\varvec{\theta } = (\theta ^0,\theta ^1,\dotsc ,\theta ^{d+r})\) and \(\varvec{\eta } = (\eta _0,\eta _1,\dotsc ,\eta _{d+r})\). Alternatively, we may use mixed coordinates, i.e. for some \(J \subset \{0,1,\dotsc ,d+r\}\) we represent P as \((\varvec{\eta }_J, \varvec{\theta }^{\bar{J}})\), where \(\varvec{\eta }_J = \{\eta _j \mid j\in J\}\) is the subvector of \(\varvec{\eta }\) containing only elements which have indices in J, and \(\varvec{\theta }^{\bar{J}} = \{\theta ^j \mid j\not \in J\}\) is the subvector of \(\varvec{\theta }\) containing elements with indices not in J.

Let \(J \subseteq \{0,1,2,\dotsc , d+r\}\), \(\bar{J} = \{0,1,2,\dotsc , d+r\} \setminus J\) and let \(P = (\varvec{\eta }_J, \varvec{\theta }^{{\bar{J}}})\) denote a mixed coordinate. Converting mixed coordinates to unmixed coordinates means recovering \(\varvec{\eta }_{{\bar{J}}}\) and \(\varvec{\theta }^J\) given \(\varvec{\eta }_J\) and \(\varvec{\theta }^{{\bar{J}}}\). Let \(\tilde{\varvec{\theta }}(\varvec{\theta }^{J}) = (\varvec{\theta }^J, \varvec{\theta }^{{\bar{J}}})\), a function \(\mathbb {R}^{|J|} \rightarrow \mathbb {R}^{d+r+1}\) obtained by mixing the fixed \(\varvec{\theta }^{\bar{J}}\) and the unknown \(\varvec{\theta }^J\) coordinates in the positions defined by J. Thus, the function \(\tilde{\varvec{\theta }}\) outputs the full \(\varvec{\theta }\) coordinates, where \(\varvec{\theta }^{{\bar{J}}}\) are always constant, and \(\varvec{\theta }^J\) are allowed to vary. Similarly, let \(\tilde{\varvec{\eta }}(\varvec{\eta }_{{\bar{J}}}) = (\varvec{\eta }_J, \varvec{\eta }_{{\bar{J}}})\) be the same function for the \(\varvec{\eta }\) coordinates.

Depending on the implementation, we will have two alternative methods to compute the mixed-to-unmixed coordinate conversion. If a closed form expression for the conversion from \(\varvec{\mu }\) to \(\varvec{\xi }\) (and vice-versa) is available, we can use Newton’s method to find the root of the function

Proposition 1

The Jacobian \({\mathbf {{{\,\mathrm{Jac}\,}}}}(F)\) of F in (13), has columns

where \({\mathbf {G}}(\tilde{\theta })_i\) is the ith column of \({\mathbf {G}}(\tilde{\theta }) = \left. \frac{\partial ^2 \psi (\varvec{\theta })}{\partial \varvec{\theta } \partial \varvec{\theta }^T} \right| _{\varvec{\theta } = \tilde{\varvec{\theta }}}\) (see (12)), and the vector \({\mathbf {e}}_i\) is the ith standard basis of \(\mathbb {R}^{n+d+1}\).

Proof

Let \(i\in {\overline{J}}\). Then

Since the ith element of \(\tilde{\varvec{\eta }}(\varvec{\eta }_{{\bar{J}}})\) is simply \(\eta _i\) and none of the other elements depend on \(\eta _i\), we have \(\frac{\partial F}{\partial \eta _i} = {\mathbf {e}}_i\).

Next let \(i\in J\). Using the chain rule we get

\(\square \)

Newton’s method will iteratively output a vector \((\varvec{\theta }^J,\varvec{\eta }_{{\bar{J}}})\) with the following update step

where the Jacobian and \(\mathbf {F}\) are evaluated at \(({\varvec{\theta }}^J,{\varvec{\eta }}_{{\bar{J}}})^{(k)}\). Given a suitable initial guess, the method converges very quickly.

In some cases (such as Example 3), there is no obvious closed form expression for the conversion from \(\varvec{\mu }\) to \(\varvec{\xi }\), which is required to compute the function F. In these cases we will exploit the Pfaffian system to recover \(\varvec{\xi }\) from the vector \(\mathbf {Q}\) in equation (7). Details for this case will be deferred to Sect. 5.2.

4.2 Extended least angle regression algorithm

We describe shortly the algorithm by Hirose and Komaki [10]. Let I be the set containing the indices of covariates present in the model. We first start with the model containing all covariates, that is \(I=\{1,2,\dotsc ,d\}\), and compute a suitable initial value. In the examples in this manuscript and the pseudo-code below, we use the maximum likelihood estimate \(\hat{\varvec{\theta }}_{\mathrm {MLE}}\) as the initial point. Thus we will require that \(n \ge d\). In addition, we also compute the maximum likelihood estimate \(\hat{\varvec{\theta }^{\emptyset }}\) of the empty model, i.e. the model where \(\theta ^1,\dotsc ,\theta ^d = 0\). The corresponding \(\eta \)-coordinates are denoted \(\hat{\varvec{\eta }^{\emptyset }}\). We will work in the d dimensional submanifold of S

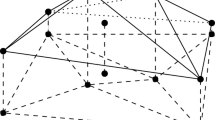

and set \(\hat{\varvec{\theta }}_{(0)} := \hat{\varvec{\theta }}_{\mathrm {MLE}}\) and \(k=1\). The intuitive idea is that we start with all covariates, and sequentially remove one covariate at a time by following the diagonal of a hypercube whose edges are m-geodesics. This will give us a piecewise linear path from \(\hat{\varvec{\theta }}_{\mathrm {MLE}}\) to \(\hat{\varvec{\theta }^{\emptyset }}\), and we will be able to infer the relative importance of the covariates based on the order in which the covariates are eliminated.

For each \(i \in I\), let \(\bar{\varvec{\theta }}_i\) be the m-projection of the current point \(\hat{\varvec{\theta }}_{(k)}\) to the e-flat submanifold corresponding to \(\theta ^i = 0\). Let \(i^*\) be the coordinate which has smallest divergence between the point \(\hat{\varvec{\theta }}_{(k)}\) and its m-projection \(\bar{\varvec{\theta }}_i\), and let this divergence be \(t^*\). Now for each \(i\in I\), look at the m-geodesic connecting \(\hat{\varvec{\theta }}_{(k)}\) and \(\bar{\varvec{\theta }}_i\), and find the point \(\varvec{\theta }^*_i\) along that geodesic that has divergence \(t^*\) from \(\hat{\varvec{\theta }}_{(k)}\). The estimate for the next step \(\hat{\varvec{\theta }}_{(k+1)}\) is constructed as follows: for all \(i\in I\), set the ith coordinate of \(\hat{\varvec{\theta }}_{(k+1)}\) to the ith coordinate of \(\varvec{\theta }^*_i\), and for all \(j\not \in I\), set the jth coordinate of \(\hat{\varvec{\theta }}_{(k+1)}\) to 0. Notice that by construction the \(i^*\)th coordinate will also be 0. We now remove \(i^*\) from the list of “active” covariates I, and restart at the beginning of the paragraph, this time in the submanifold

We quit the algorithm after d steps, when no covariates are left. The divergence function used in the submanifold \(M_I\), which we will denote by \(D^{[I]}\), is the restriction of the KL-divergence on M onto the submanifold. We compute it as follows: denote the coordinates in \(M_I\) as \(\varvec{\theta }_I = (\theta ^i)_{i\in I}\) and \(\varvec{\eta }_I = (\eta _i)_{i\in I}\). The potential functions become \(\psi _I(\varvec{\theta }_I) = \psi (\varvec{\theta }_I,\mathbf {0}_{{\bar{J}}})\) and \(\phi _I(\varvec{\eta }_I) = \varvec{\eta }_I \cdot \varvec{\theta }_I - \psi _I(\varvec{\theta }_I)\). The divergence is then

The algorithm starts from the full model and proceeds step by step towards the empty model, which is the opposite direction compared to the LARS. Other than that, the geometric idea of the algorithm is the same as in LARS: at each step k, we move the current estimate \(\hat{\varvec{\theta }}_{(k)}\) towards the origin, in a direction that bisects the m-geodesics corresponding to each m-projection. We hit the next estimate \(\hat{\varvec{\theta }}_{(k+1)}\) exactly when one of the coordinates \(i\in I\) of the vector \(\hat{\varvec{\theta }}_{(k)}\) hits 0.

The following pseudocode describes the algorithm more precisely. The algorithm is described in the submanifold M of (14), so we do not write down coordinates \(0, d+1, \dotsc , d+r\) explicitly. We input the data (observations and design matrix) and an underlying distribution (essentially the functions \(u_1(\mathbf {y}), \dotsc , u_r(\mathbf {y})\) in (8)), and we get as an output a sequence of estimators \((\hat{\varvec{\theta }}_{(0)}, \dotsc , \hat{\varvec{\theta }}_{(d)})\), where the estimator obtained in the kth step corresponds to a model with k covariates removed.

-

1.

Let \(I = \{1,2,\dotsc , d\}\), \(\hat{\varvec{\theta }}_{(0)} := \hat{\varvec{\theta }}_{\mathrm {MLE}}\), and \(k=0\).

-

2.

For all \(i \in I\), let \(M(i,0,I) = \{\varvec{\theta } \mid \theta ^i = 0, \theta ^j = 0~(j\not \in I)\} = M(I\setminus \{i\})\) and calculate the m-projection \(\overline{\varvec{\theta }}(i,I)\) of \(\hat{\varvec{\theta }}_{(k)}\) on M(i, 0, I).

-

3.

Let \(t^* = \min _{i\in I} D^{[I]}(\hat{\varvec{\theta }}_{(k)}, \overline{\varvec{\theta }}(i,I))\) and \(i^*=\arg \min _{i\in I} D^{[I]}(\hat{\varvec{\theta }}_{(k)}, \overline{\varvec{\theta }}(i,I))\).

-

4.

For every \(\alpha ^i \in \mathbb {R}, i\in I\), let \(M(i,\alpha ^i, I) = \{\theta \mid \theta ^i = \alpha ^i, \theta ^j = 0~(j\not \in I)\}\). For every \(i \in I\), compute \(\alpha ^i\) such that the m-projection \(\overline{\varvec{\theta }}'(i,\alpha ^i,I)\) of \(\hat{\varvec{\theta }}_{(k)}\) on \(M(i, \alpha ^i, I)\) satisfies \(t^* = D^{[I]}(\hat{\varvec{\theta }}_{(k)}, \overline{\varvec{\theta }}'(i,\alpha ^i,I))\).

-

5.

Let \({\hat{\theta }}^i_{(k+1)} = \alpha ^i ~ (i\in I)\) and \({\hat{\theta }}^j_{(k+1)} = 0 ~ (j\not \in I)\).

-

6.

If \(k=d-1\), then go to step 7. If \(k<d-1\), then go to step 2 with \(k := k+1\), \(I := I\setminus \{i^*\}\).

-

7.

Let \(\hat{\varvec{\theta }}_{(d)} = 0\). Output \(\hat{\varvec{\theta }}_{(0)},\dotsc ,\hat{\varvec{\theta }}_{(d)}\) and quit the algorithm.

We can now rank the covariates in order of importance by looking at the output of the algorithm. The zeroth estimator \(\hat{\varvec{\theta }}_{(0)}\) was defined as the maximum likelihood estimate of the full model containing every covariate, and at each subsequent estimator, one of the components will vanish, i.e. the kth estimator \(\hat{\varvec{\theta }}_{(k)}\) will have exactly k of its elements equal to zero. The element that vanishes corresponds to the covariate that is deemed the least impactful at that particular step. Thus by looking at the order in which the covariates vanish in the sequence \(\hat{\varvec{\theta }}_{(0)},\dotsc ,\hat{\varvec{\theta }}_{(d)}\), we can order the covariates from least to most important.

4.3 Adding holonomicity

We will focus our attention to the potential function \(\psi (\varvec{\xi })\) in (8), which can be written as

Whether or not \(\psi ^*(\varvec{\xi })\) has a closed form representation depends on the underlying distribution. We could use numerical integration if no closed form expression for \(\psi ^*(\varvec{\xi })\) exists, but such an approach is computationally inefficient.

Instead, we assume that all observations are independent. Then we can write the potential function as a sum

where \(u_b(\mathbf {y}) = \sum _a u_b^a(y_a)\), and \(f_a(\varvec{\xi }):= \int \exp \left( y \xi ^a + \sum _b u_b^a(y) \xi ^{b+n} \right) \, {\mathrm {d}}y\).

We will further assume that for each \(a = 1,\dotsc , n\), \(b = 1,2, \dotsc , n+r\), the function \(f_a\) has a zero-dimensional annihilating ideal in \(\mathbb {C}({\xi ^a}, {\xi ^{n+1}}, \dotsc , {\xi ^{n+r}})\langle \partial _{\xi ^a}, \partial _{\xi ^{n+1}}, \dotsc , \partial _{\xi ^{n+r}} \rangle \). As was discussed in the end of Sect. 3, such an ideal can be found by using Oaku’s integration algorithm [18], or alternatively, as in Examples 2 and 3, we can also derive the annihilating ideal manually (e.g. finding relations between derivatives using integration by parts).

Following the construction in Sect. 3.2, for each \(a = 1,\dotsc ,n\) we have a Pfaffian system

where \(f_a\) is the first entry of the vector \({\mathbf {Q}}_a\).

The key observation here is that we can now write the gradient and Hessian of the potential function \(\psi ^*(\varvec{\xi })\) using only the entries of all vectors \({\mathbf {Q}}_a\). Hence, throughout the ELARS algorithm, instead of recomputing the \(f_a\) each time using numerical integration, we do it only once at some initial point \(\varvec{\xi }_0\) to construct the vectors \({\mathbf {Q}}_a(\varvec{\xi }_0)\). As the point \(\varvec{\xi }\) changes, we update the vectors \(Q_i\) using the holonomic update procedure described in Sect. 4.3.1.

Example 2

(Truncated normal) We consider a distribution where each observation is independently distributed according to a normal distribution restricted to positive values only. We will call this the truncated normal distribution. We get a potential function of the form

Let \(f_a = \int _0^\infty \exp \left( y\xi ^a + y^2 \xi ^{n+1} \right) \, {\mathrm {d}}y\). We first note that \(\partial _{\xi ^{n+1}} f_a = \partial _{\xi ^n}^2 f_a\), and using integration by parts, we have

which gives the partial differential equation \((\xi ^a + 2\xi ^{n+1}\partial _{\xi ^a})\bullet f_a = -1\). From this we see that

In this example we would choose \({\mathbf {Q}}_a = (f_a, 1)^T\), so that we get the Pfaffian system

We can now compute the gradient of the potential function. Recall that \(\psi ^*(\varvec{\xi }) = \sum _a \log f_a\), so

when \(a = 1,2,\dotsc , n\). Hence if all \({\mathbf {Q}}_a\) are known, the gradient of \(\psi ^*\) can be computed using elementary operations, since \(f_a\) and its derivatives can be recovered from \({\mathbf {Q}}_a\) and the Pfaffian systems.

The Hessian of \(\psi ^*\) can be computed in a similar manner. We have

and we remark that all derivatives and second derivatives of \(f_a\) can be computed from the Pfaffian system.

Example 3

(Weighted truncated normal) Consider a set of n independent observations, where each is distributed according to

when \(y \ge 0\) and for some fixed \(c > 0\) and where \(a = 1,2, \dotsc , n\). The function \(f_a\) is defined as the normalizing constant

We will derive a Pfaffian system for \(f_a\). To simplify notation, we will write \(\partial _a\) instead of \(\frac{\partial }{\partial \xi ^a}\). Note that

so in particular \(\partial _{n+1} f_a = \partial ^2_a f_a\). Integration by parts yields

so \(f_a\) is annihilated by the holonomic ideal \(\langle \partial _{n+1} - \partial _a^2, \frac{2\xi ^{n+1}}{c+1} \partial _a^2 + \frac{\xi ^a}{c+1}\partial _a + 1 \rangle \). If we define the vector \({\mathbf {Q}}_a = (f_a, \partial _a f_a, \partial _a^2 f_a, \partial _a^3 f_a)^T\), we get the Pfaffian system

As in the previous example, we can now compute any derivative of the potential function using the vectors \({\mathbf {Q}}_a\) and matrices \({{\mathbf {P}}}_a^a, {{\mathbf {P}}}_a^{b+1}\).

4.3.1 Holonomic update of the vectors \({\mathbf {Q}}_a\)

Nearly every step of the algorithm requires the knowledge of the vectors \({\mathbf {Q}}_a(\varvec{\xi })\) for all \(a=1,2,\dotsc ,n\) at some point P with coordinates \(\varvec{\xi }\). For example in the case of the truncated normal distribution in Example 2, computing the vectors \({\mathbf {Q}}_a\) would require a total of n separate numerical integrations. Computing \({\mathbf {Q}}_a(\varvec{\xi })\) from scratch at every step is computationally costly. The Pfaffian systems however gives a family of ODE systems that are satisfied by the vectors \({\mathbf {Q}}_a\). Given a point \(\varvec{\xi }_{\mathrm {old}}\) where \({\mathbf {Q}}_a(\varvec{\xi }_{\mathrm {old}})\) are known, we can use standard ODE solvers such as Runge-Kutta to find the value of \({\mathbf {Q}}_a(\varvec{\xi })\) at some other point \(\varvec{\xi }\). In our implementation, we use the R package hgm [22] by Takayama et al., which uses the RK4(5)7 method from Dormand and Prince [5].

We can also find the value of \({\mathbf {Q}}_a\) after a change in \(\varvec{\theta }\) coordinates. Since \(\varvec{\xi } = {\mathbf {X}}_B \varvec{\theta }\) we have

Again, if both \(\varvec{\theta }_{\mathrm {old}}\) and \({\mathbf {Q}}_a(\varvec{\theta }_{\mathrm {old}})\) are known, then we can use numerical ODE solvers to obtain \(\mathbf {Q}_a(\varvec{\theta })\).

There are also cases where we need to conduct the holonomic update step with respect to mixed coordinates. Let \(J \subset \{0, 1, \dotsc , d+r\}\) and assume \(P_\mathrm {old} = (\varvec{\theta }^{\bar{J}}_\mathrm {old}, {\varvec{\eta }_J}_\mathrm {old})\) and \(\mathbf {Q}_a(P_\mathrm {old})\) are known. In order to obtain \(\mathbf {Q}_a(P)\) for some other \(P = (\varvec{\theta }^{{\bar{J}}}, \varvec{\eta }_J)\), we will need to find a Pfaffian system for \(\mathbf {Q}_a\) in terms of the mixed coordinates

Theorem 2

Let \(\emptyset \ne J \subsetneq \{0, 1, \dotsc , d+r\}\) be a nonempty, strict subset and let \(\varvec{\rho } = (\varvec{\theta }^{{\bar{J}}}, \varvec{\eta }_J)\) denote a mixed coordinate. For a vector \(\varvec{\rho }\) with \(d+r+1\) elements, let \(\varvec{\rho }_J\) denote the subvector \((\rho _j)_{j\in J}\), and similarly \(\varvec{\rho }_{{\bar{J}}} = (\rho _j)_{j\not \in J}\). Let

be the function that maps the mixed coordinates \(\varvec{\rho }\) to the \(\varvec{\theta }\) coordinate. Then

where

Furthermore, \(\frac{\partial \mathbf {Q}_a}{\partial \varvec{\rho }}\) is a function of \(\varvec{\rho }\) and \(\mathbf {Q}_a(\varvec{\rho })\).

Proof

We wish to find the derivative of \(\mathbf {Q}_a\) in terms of some mixed coordinates \(\varvec{\rho }\). We will first convert the mixed coordinates \(\varvec{\rho }\) to \(\varvec{\theta }\) coordinates, and then evaluate the derivative. By the chain rule, we obtain the first part of the theorem

By definition, \(\varvec{\rho }_J = \varvec{\eta }_J\) and \(\varvec{\rho }_{{\bar{J}}} = \varvec{\theta }^{{\bar{J}}}\), and the function \(\varvec{\theta }^*\) satisfies the following identities

Let \(i \in {\bar{J}}\) and \(j \in J\). Then clearly

since the components of \(\varvec{\rho }\) do not depend on each other. Likewise, if both \(i,j \in {\bar{J}}\), then

Hence we get \(\frac{\partial \varvec{\theta }^*_{{\bar{J}}}}{\partial \varvec{\rho }_J} = {\mathbf {0}}_{|{\bar{J}}| \times |J|}\) and \(\frac{\partial \varvec{\theta }^*_{{\bar{J}}}}{\partial \varvec{\rho }_{{\bar{J}}}} = {\mathbf {I}}_{{\bar{J}} \times {\bar{J}}}\).

Differentiating both sides of (19) by \(\varvec{\rho }_J\), we have

where the right-hand side becomes \(\frac{\partial \varvec{\eta }_J}{\partial \varvec{\eta }_J} = {\mathbf {I}}\), and the left-hand side becomes

since \(\frac{\partial \varvec{\theta }^*_{{\bar{J}}}}{\partial \varvec{\rho }_J} = {\mathbf {0}}\). Thus

Finally, differentiate both sides of (19) by \(\varvec{\rho }_{{\bar{J}}}\) to get

The right hand side is equal to \(\frac{\partial \varvec{\eta }_J}{\partial \varvec{\theta }^{{\bar{J}}}} = 0\), since once again the elements of \(\varvec{\rho }\) do not depend on each other. The left-hand side becomes

Hence

Finally, \(\frac{\partial \mathbf {Q}_a}{\partial \varvec{\rho }}\) is indeed a function of \(\varvec{\rho }\) and \(\mathbf {Q}_a(\varvec{\rho })\), since \(\frac{\partial \mathbf {Q}_a}{\partial \varvec{\theta }}\), \(\frac{\partial \varvec{\eta }}{\partial \varvec{\theta }} = \frac{\partial ^2 \psi (\varvec{\theta })}{\partial \varvec{\theta } \partial \varvec{\theta }^T}\) is a function of \(\varvec{\rho }\) and \(\mathbf {Q}_a(\varvec{\rho })\)Footnote 2 based on the discussion in the beginning of Sect. 4.3. \(\square \)

4.3.2 Holonomic m-projections

Using the results of Theorem 2 we can now carry out m-projections and recover the vector \(\mathbf {Q}_a\) at the projected point given the value of \(\mathbf {Q}_a\) at the previous point. Let \(\emptyset \ne I \subset \{0,1,\dotsc ,d+r\}\), \(i\not \in I\) and \(\alpha \in \mathbb {R}\). In the algorithm, all of the m-projections will be to the submanifold \(M(i,\alpha ,I) = \{\theta \mid \theta ^i = \alpha , \theta ^j = 0~(j\not \in I)\}\). Let a point P have the dual coordinates \(\varvec{\theta }\) and \(\varvec{\eta }\). The m-projection of P onto \(M(i,\alpha ,I)\) will have the mixed coordinates \(\varvec{\rho } = (\varvec{\theta }^I, \theta ^i = \alpha , \varvec{\eta }_{{\bar{I}} \setminus \{i\}})\). In other words, we first convert the point P to mixed coordinates according to the set \(I \cup \{i\}\) to get \(\varvec{\rho }_0 = (\varvec{\theta }^{I\cup \{i\}}, \varvec{\eta }_{\overline{I\cup \{i\}}})\), and then send the element \(\theta ^i\) to \(\alpha \) to get \(\varvec{\rho }\). Given \(\mathbf {Q}_a(\varvec{\rho }_0)\) (\(= \mathbf {Q}_a(P)\)) and the Pfaffian system, we may now use Theorem 2 to obtain \(\mathbf {Q}_a(\varvec{\rho })\), and thus recover the full \(\varvec{\theta }\) coordinates from the mixed coordinates.

4.4 Holonomic extended LARS algorithm

The holonomic extended LARS algorithm is our main result. The algorithm works exactly as the extended LARS algorithm described in Sect. 4.2, but now we have also to specify a Pfaffian system for the \(\mathbf {Q}_a(\varvec{\xi })\) as an input in addition to the data (response \(\mathbf {y}\) and design matrix \({\mathbf {X}}\)) and the underlying distribution (\(u_1(\mathbf {y}),\dotsc ,u_r(\mathbf {y})\)). Again, we describe the algorithm in the submanifold \(M \subset S\) (see (14)), so we will mostly ignore the coordinates indexed by \(0, d+1, \dotsc , d+r\) in the vectors \(\varvec{\mu }\) and \(\varvec{\theta }\). We will only compute them at the end of step 5, because they are needed for the initial guesses of the numerical solvers.

We get as an output a sequence of estimators \(\hat{\varvec{\theta }}_{(0)},\dotsc ,\hat{\varvec{\theta }}_{(d)}\), where the kth estimator \(\hat{\varvec{\theta }}_{(k)}\) corresponds to a model with \(d-k\) covariates. The holonomic extended LARS algorithm thus looks as follows

-

1.

Let \(I = \{1,2,\dotsc , d\}\), \(\hat{\varvec{\theta }}_{(0)} := \hat{\varvec{\theta }}_{\mathrm {MLE}}\), and \(k=0\). Compute \(\mathbf {Q}_a(\hat{\varvec{\theta }}_{(0)})\) for all \(a = 1,2,\dotsc ,n\).

-

2.

For all \(i \in I\), let \(M(i,0,I) = \{\varvec{\theta } \mid \theta ^i = 0, \theta ^j = 0~(j\not \in I)\} = M(I\setminus \{i\})\) and calculate the holonomic m-projection \(\overline{\varvec{\theta }}(i,I)\) of \(\hat{ \varvec{\theta }}_{(k)}\) on M(i, 0, I) and obtain the vectors \(\mathbf {Q}_a(\overline{\varvec{\theta }}(i,I))\).

-

3.

Let \(t^* = \min _{i\in I} D^{[I]}(\hat{\varvec{\theta }}_{(k)}, \overline{\varvec{\theta }}(i,I))\) and \(i^*=\arg \min _{i\in I} D^{[I]}(\hat{\varvec{\theta }}_{(k)}, \overline{\varvec{\theta }}(i,I))\).

-

4.

For any \(\alpha ^i \in \mathbb {R}, i\in I\), let \(M(i,\alpha ^i, I) = \{\varvec{\theta } \mid \theta ^i = \alpha ^i, \theta ^j = 0~(j\not \in I)\}\). For every \(i \in I\), compute \(\alpha ^i\) such that the m-projection \(\overline{\varvec{\theta }}'(i,\alpha ^i,I)\) of \(\hat{\varvec{\theta }}_{(k)}\) on \(M(i, \alpha ^i, I)\) satisfies \(t^* = D^{[I]}(\hat{\varvec{\theta }}_{(k)}, \overline{\varvec{\theta }}'(i,\alpha ^i,I))\).

-

5.

Let \({\hat{\theta }}^i_{(k+1)} = \alpha ^i ~ (i\in I)\) and \({\hat{\theta }}^j_{(k+1)} = 0 ~ (j\not \in I)\).

The \((k+1)\)th estimate will have mixed coordinates \(\hat{\varvec{\rho }}_{(k+1)} = ({\hat{\eta }}_0^\emptyset , {\hat{\theta }}^1_{(k+1)}, \dotsc , {\hat{\theta }}^d_{(k+1)}, {\hat{\eta }}_{d+1}^\emptyset ,\dotsc ,{\hat{\eta }}_{d+r}^\emptyset )\). Use the holonomic update to compute \(\mathbf {Q}_a(\hat{\varvec{\rho }}_{(k+1)})\) using the value \(\mathbf {Q}_a(\hat{\varvec{\rho }}_{(k)})\), and then use this to obtain the remaining coordinates \(\hat{\theta }_{(k+1)}^0, \hat{\theta }_{(k+1)}^{d+1}, \dotsc , \hat{\theta }_{(k+1)}^{d+r}\).

-

6.

If \(k=d-1\), then go to step 7. If \(k<d-1\), then go to step 2 with \(k := k+1\), \(I := I\setminus \{i^*\}\).

-

7.

Let \(\hat{\varvec{\theta }}_{(d)} = 0\). Output \(\hat{\varvec{\theta }}_{(0)},\dotsc ,\hat{\varvec{\theta }}_{(d)}\) and quit the algorithm.

As in Sect. 4.2, by looking at the order in which the covariates vanish in the sequence \(\hat{\varvec{\theta }}_{(0)},\dotsc ,\hat{\varvec{\theta }}_{(d)}\), we can determine the order of importance of the covariates.

5 Worked out examples

In this section we will work out the implementation of the Holonomic Extended Least Angle Regression algorithm on two examples. The algorithm was implemented in the R programming language [21], and the code can be found in [7].

5.1 Truncated normal

As in Example 2, we consider a model where each observation comes from a normal distribution restricted to positive values. Its probability density function is

where \(y\in (0,\infty )\), \(\mu \in \mathbb {R}\) and \(\sigma \in (0,\infty )\). By expanding, we can write the probability density function as a function of natural parameters

where \(\xi _1 = \frac{\mu }{\sigma ^2}\) and \(\xi _2 = -\frac{1}{2\sigma ^2}\). From the form above we see that the truncated normal distribution belongs to the exponential family. Note also that the normalizing constant \(f(\xi _1, \xi _2) = \int _0^\infty e^{\xi _1 y + \xi _2 y^2} \, {\mathrm {d}}y\) does not have a closed form expression in general, and converges if and only if \(\xi _2 < 0\). A generalization of the truncated normal distribution are the exponential-polynomial distributions, of the form

for \(y>0\) and \(\xi _n < 0\). This family of distributions and their usage with the holonomic gradient method has been studied in Hayakawa and Takemura [8].

We can naturally construct a generalized linear model using the canonical link where each observation is distributed according to the truncated normal distribution. Given a sample \(\mathbf {y} = (y_1,\dotsc ,y_n)\), assume that each \(y_a\) is independent and distributed according to a truncated normal distribution with a unique mean parameter \(\mu _a\) and a common variance parameter \(\sigma ^2\). Hence, using the notation in equation (20) each observation has their own \(\xi _1\) parameter, and \(\xi _2\) is the same in each observation. To make the notation consistent with [10], for each \(a=1,\dotsc ,n\), the “\(\xi _1\) parameter” of observation a will be called \(\xi ^a\) and the common “\(\xi _2\) parameter” will be called \(\xi ^{n+1}\). With this notation, each observation will have the distribution

and since every observation is independent, the joint distribution of \(\mathbf {y}\) is

where \(f_a(\xi ^1, \dotsc , \xi ^{n+1}) := f(\xi ^a, \xi ^{n+1})\).

In the generalized linear model, each observation \(y_i\) is explained by a set of d explanatory variables \(x^i_1, \dotsc , x^i_d\). With the canonical link function in particular, the natural parameter is simply an affine combination of the explanatory variables, i.e. for some real numbers \(\theta ^0, \theta ^1, \dotsc , \theta ^d\), we have \(\xi ^i = \theta ^0 + \theta ^1 x^i_1 + \cdots + \theta ^d x^i_d\).

We will now define some notation. Let \({\mathbf {X}} = (x^i_j)\) be the \((n \times d)\) design matrix, \(\varvec{\theta }' = (\theta ^0, \theta ^1, \dotsc , \theta ^d)^T\) and \(\varvec{\xi }' = (\xi ^1, \dotsc , \xi ^n)^T\). If \(\tilde{{\mathbf {X}}} = \begin{bmatrix} \mathbf {1_n}&{\mathbf {X}} \end{bmatrix}\), where \(\mathbf {1_n}\) is a column vector of size n where each element is 1, then we have \(\varvec{\xi }' = \tilde{{\mathbf {X}}} \varvec{\theta }'\). We can also define a block-diagonal matrix \({\mathbf {X}}_B\) and vector \(\mathbf {Y}\) as

As we defined in Sect. 4, we have \(\theta ^{n+1} = \xi ^{n+1}\). If we set \(\varvec{\theta } = (\theta ^0, \dotsc , \theta ^{d+1})^T\) and \(\varvec{\xi } = (\xi ^1,\dotsc ,\xi ^{n+1})^T\) we have \(\varvec{\xi } = {\mathbf {X}}_B\varvec{\theta }\), and we can write the pdf of the model as

where \(\psi (\varvec{\theta }) = \psi ^*({\mathbf {X}}_B \varvec{\theta }) = \sum _{a=1}^n \log f_a({\mathbf {X}}_B\varvec{\theta })\) is the potential function.

5.1.1 Maximum likelihood estimation

Next we will discuss details regarding maximum likelihood estimation of the model in equation (21). The log-likelihood is easily obtained from equation (21)

We will use the Holonomic Gradient Method to find the maximum likelihood estimate. Since the Hessian matrix of \(\ell (\varvec{\theta } \mid \mathbf {y})\) is easily obtained, we will use the Newton–Raphson method. Since \(\psi (\varvec{\theta }) = \psi ^*({\mathbf {X}}_B\varvec{\theta })\), we can use matrix calculus to obtain the Hessian and gradient of the log-likelihood function. Indeed, since the gradient is \(\frac{\partial \psi }{\partial \varvec{\theta }} = \frac{\partial \psi ^*}{\partial \varvec{\xi }} \cdot {\mathbf {X}}_B\) and the Hessian is \(\frac{\partial ^2 \psi }{\partial \varvec{\theta } \partial \varvec{\theta ^T}} = {\mathbf {X}}_B^T \cdot \frac{\partial ^2 \psi ^*}{\partial \varvec{\xi } \partial \varvec{\xi ^T}} \cdot {\mathbf {X}}_B\), we get the gradient and Hessian of the log-likelihood function as follows

The terms \( \frac{\partial \psi ^*}{\partial \varvec{\xi }}\) and \(\partial \varvec{\xi } \partial \varvec{\xi ^T}\) are respectively the gradient and Hessian of the potential function, which can be computed using the Pfaffian systems as in Example 2.

There are some numerical issues to consider when using the method outlined above for maximum likelihood estimation. Let \(\varvec{\theta }^{(k)}\) be the approximation of the maximum likelihood estimate at the kth iteration of the Newton–Raphson method. The next estimate is expressed as \(\varvec{\theta }^{(k+1)} = \varvec{\theta }^{(k)} + \varvec{\Delta }\), and the difference \(\varvec{\Delta }\) is obtained by solving the linear system

However, there are times where the Newton–Raphson method is “too violent”, and yields a \(\varvec{\Delta }\) of large magnitude, meaning that \(\varvec{\theta }^{(k)}\) and \(\varvec{\theta }^{(k+1)}\) are relatively far apart. This in turn increases the error in the holonomic update. Furthermore, there are cases where the Newton–Raphson method yields an iterate which does not belong to the model, i.e. when \(\theta ^{(k+1)}_{d+1} \ge 0\). In our implementation we solve the problem by shortening the step size \(\gamma \) when the Newton–Raphson method yields an estimate that is either too far from the previous estimate, or an estimate not belonging to the model.

5.1.2 Coordinate conversions

As described in Sect. 4, we have two sets of e-affine coordinates, \(\varvec{\xi }\) and \(\varvec{\theta }\), and m-affine coordinates, \(\varvec{\mu }\) and \(\varvec{\eta }\), along with their potential functions, respectively \(\psi ^*(\varvec{\xi })\), \(\psi (\varvec{\theta })\), \(\phi ^*(\varvec{\mu })\), and \(\phi (\varvec{\eta })\). The two sets of coordinates are related with

Let P be a point on the manifold (21), and assume the vectors \(\mathbf {Q}_a(P)\) are known. Given the \(\varvec{\xi }\) coordinates of P, we can recover its \(\varvec{\mu }\) coordinates from the Pfaffian systems in Example 2 since \(\mu _i = \frac{\partial \psi ^*}{\partial \xi ^i}\). If we expand the expressions in the Pfaffian, we obtain

We can also invert (24) to get the coordinate conversion from \(\varvec{\mu }\) to \(\varvec{\xi }\)

The conversion \(\varvec{\theta }\) to \(\varvec{\eta }\) is also simple, since we can just compose the transformations in (23) and (24), i.e. \(\varvec{\eta }(\varvec{\theta }) = {\mathbf {X}}_B^T \varvec{\mu }({\mathbf {X}}_B\varvec{\theta })\).

Next we will tackle mixed coordinate conversions. As in Sect. 4.1, let \(J \subseteq \{0,1,2,\dotsc , d+r\}\), \(\bar{J} = \{0,1,2,\dotsc , d+r\} \setminus J\) and let \(P = (\varvec{\eta }_J, \varvec{\theta }^{{\bar{J}}})\) denote a mixed coordinate. Additionally, assume that the value of the vector \(\mathbf {Q}_a\) is known at point P for all \(a = 1,2,\dotsc ,n\).

Newton’s method applied to the function \(\mathbf {F}\) in (13) will output \(\varvec{\eta }_{{\bar{J}}}\) and \(\varvec{\theta }^J\) at the same time, thus allowing us to recover the full \(\varvec{\theta }\) and \(\varvec{\eta }\) simultaneously. With the truncated normal distribution, using Newton’s method to convert mixed coordinates converges very quickly given a suitable initial guess. Depending on the situation where mixed coordinate conversion is needed, there are a few suitable initial guesses that work well. Mixed coordinate conversion is needed in three different situations in the algorithm described in Sect. 4.4:

-

1.

m-projections (steps 2, 4). Use the point before the projection as an initial guess.

-

2.

updating \(\mathbf {Q}_a\) (steps 2, 4, 5). Use the point before the update as the initial guess.

-

3.

the “wrap-up step” (step 5). Use the estimate \(\hat{\varvec{\theta }}_{(k)}\) of the current iteration as the initial guess.

We note again that there are cases where Newton’s method outputs a point \((\varvec{\theta }^J,\varvec{\eta }_{{\bar{J}}})^{(k+1)} = (\varvec{\theta }^J,\varvec{\eta }_{{\bar{J}}})^{(k)} + \varvec{\Delta }^{(k)}\) that does not belong to the model.Footnote 3 In our implementation, we simply iteratively half the step \(\varvec{\Delta }^{(k)}\) until the resulting point \((\varvec{\theta }^J, \varvec{\eta }_{{\bar{J}}})^{(k+1)}\) is satisfactory. For the truncated normal distribution, such a scaling of the Newton step is required if \(d+1 \in J\) and the element \((\theta ^{d+1})^{(k+1)}\) in \((\varvec{\theta }^J, \varvec{\eta }_{{\bar{J}}})^{(k+1)}\) becomes positive. More precisely, in this case the next iterate becomes

where \(\alpha = \Biggl \lceil -\frac{\log \left( -\frac{(\theta ^{d+1})^{(k)}}{(\Delta ^{d+1})^{(k)}}\right) }{\log 2} \Biggr \rceil \).

5.2 Weighted truncated normal distribution

For some fixed \(c > 0\), consider the distribution given by the unnormalized density function

where \(0\le y \le \infty \), and \(b < 0\). Its normalizing constant is given by

The annihilating ideal and Pfaffian system for f is derived in example 3.

For this example, writing closed form expressions for the coordinate conversions in terms of the \(f_a\) and \(\varvec{\xi }\) is difficult. We will circumvent this problem by making the vectors \(\mathbf {Q}_a\) larger so that \(\varvec{\xi }\) can be recovered from the \(\mathbf {Q}_a\) alone. As before, let \(f_a = \int _0^\infty y^c \exp (\xi ^a y + \xi ^{n+1} y^2) \, {\mathrm {d}}y\). We will write \(\partial _a f_a\) instead of \(\partial f_a/\partial \xi ^a\) to simplify notation.

Recall from example 3 that the annihilating ideal of \(f_a\) is \(\langle \partial _{\xi ^{n+1}} - \partial _{\xi ^a}^2, \frac{2{\xi ^{n+1}}}{c+1}\partial _{\xi ^a}^2 + \frac{{\xi ^a}}{c+1}\partial _{\xi ^a} + 1 \rangle \). By looking at the annihilating ideal of \(f_a\) we see that

Hence if we define \(\mathbf {Q}_a = (f_a, \partial _af_a, \partial ^2f_a, \partial ^3f_a)^T\), we can recover the full vector \(\varvec{\xi }\) from the \(\mathbf {Q}_a\) alone by solving the linear system above. Thus the vectors \(\mathbf {Q}_a\) allow the recovery of every coordinate: for example, the \(\varvec{\mu }\) coordinates are given by

Mixed coordinate conversion is also greatly simplified; there is no need to use Newton’s method. For example, assume we want to find the \(\varvec{\theta }\) coordinates corresponding to the mixed coordinate \(\varvec{\rho }\). It suffices to know the value of \(\mathbf {Q}_a\) at some other point with mixed coordinates \(\varvec{\rho }^{(\mathrm {old})}\). Then we can use the holonomic update to compute \(\mathbf {Q}_a\) at \(\varvec{\rho }\), and use the system above to recover \(\varvec{\xi }\), from which we can recover \(\varvec{\theta }\) by using least-squares.

5.3 Computational details

In order to not end up with nearly singular matrices in the algorithm, we will sometimes have to rescale both the design matrix and the response vector. We center and rescale each covariate such that the mean becomes 0 and the standard deviation becomes 1. In other words, if \(\mathbf {x}^i\) is the ith column of the design matrix \({\mathbf {X}}\), the scaling maps

where \(\overline{\mathbf {x}}^i = \frac{1}{n}\sum _{j=1}^n x^i_j\) is the mean, and \(\sigma ^i = \sqrt{\sum _{j=1}^n (x^i_j - \overline{\mathbf {x}}^i)^2 / (n-1)}\). Note that as in [10], scaling and centering and scaling the design matrix will not affect the result of the algorithm. In addition, we will scale the response vector \(\mathbf {y}\) such that the sample standard deviation equals 1

These scaling operations allow us to keep the orders of magnitude of the elements in the \(\varvec{\xi }\) and \(\varvec{\mu }\) coordinates roughly equal, which in turn make the orders of magnitude of the elements in the \(\varvec{\theta }\) and \(\varvec{\eta }\) coordinates roughly equal. This is often necessary when doing actual computations, since otherwise many operations involving mixed coordinates (for example the matrix \({\mathbf {{{\,\mathrm{Jac}\,}}}}(F)\) in Proposition 1) will end up nearly singular, with certain columns several orders of magnitude larger than others.

6 Results

6.1 Simulations

We test the algorithm by first looking at simulated datasets. In all of the simulations we will assume the weighted truncated normal distribution from Sect. 5.2 as the underlying distribution with \(c=0.5\).

First, we will consider a sample of \(n = 100\) observations and a design matrix with entries chosen uniformly at random from the range [0, 1]. We will give equal weight to every covariate with no intercept term: the true parameter vector \(\varvec{\theta }\) will be chosen such that \(\theta _1 = \cdots = \theta _d = 1/d\), \(\theta _0 = 0\), and \(\theta _{d+1} = -1\). With this setup, we expect that the ordering of the covariates depends only on the magnitude of the coefficient found in the maximum likelihood estimation step at the beginning. The results with varying d are shown in Fig. 1. The algorithm starts on the right of each plot, where the value of each parameter is equal to the maximum likelihood estimate of the full model. At each iteration, we compute the divergence of the current parameters compared to the empty model, and we plot the value of each parameter. As expected, in all cases the path towards zero of each covariate seems unaffected by other covariates.

Next we will introduce some correlation structures between the covariates. In Fig. 2 we have 100 samples with 5 covariates, where the first four columns of the design matrix are chosen uniformly at random from the range [0, 1]. The fifth column will be equal to \(\mathbf {X}_5 = \mathbf {X}_4 + \epsilon \), where \(\epsilon \sim N(0, \sigma ^2)\), and \(\sigma \) is allowed to vary. We will choose \(\varvec{\theta }\) such that each covariate has equal weight, as in the previous paragraph. We observe that as \(\sigma \) gets smaller, the paths for \(X_4\) and \(X_5\) become much more correlated, with a steeper slope than the other three covariates. Once either \(X_4\) or \(X_5\) gets eliminated, the slope becomes more like \(X_1,X_2,X_3\).

In Figs. 3 and 4 we simulate different kinds of correlation structures. For both examples, we still have \(n=100\) observations, and \(\theta = 1/d\) unless otherwise specified. In Fig. 3, we set \(\theta _1 = 0\) and \(X_5 = X_4 \cdot X_3\). We note that the covariate \(X_1\) is quickly eliminated since its value does not affect the observations at all.

In Fig. 4, we set covariates \(X_4\) and \(X_5\) equal to \(X_3\) plus Gaussian noise with \(\sigma = 0.05\). The covariates \(X_3, X_4, X_5\) contain redundant information, so \(X_4\) and \(X_5\) get eliminated early.

6.2 Real dataset

As a real life dataset, we will use a dataset on slump flow of concrete from [24]. The set has 103 data points with 7 input variables representing proportions of the following components in concrete: cement, blast furnace slag, fly ash, water, superplasticizer (SP), coarse aggregate and fine aggregate. The response variable is the slump flow of the concrete (in cm), which by definition cannot be negative. This motivates the use of the normal distribution truncated to only positive values. The resulting plot is shown in Fig. 5. The results in [24] assert that water, superplasticizer and fly ash have strong effects. This is in contrast to the results shown by our algorithm, which show that the three parameters with the most predictive power are water, fine aggregate and slag, and the effect of fly ash and superplasticizer is relatively weak. In [2], Antoniadis et al. studied different variable selection algorithms on the same concrete dataset. Their results also show that the effects of fly ash and superplasticizer in [24] have probably been overestimated, and their methods show that slag and water have the highest influence, with one method choosing fly ash as well. We compared the sum of square errors for these subsets in Table 1, and observe that for a subset of size 3, our ranking achieves the lowest SSE value.

7 Discussion

In this manuscript, we presented the holonomic extended LARS algorithm, and successfully implemented in R. The algorithm was tested on both simulated and real datasets, and the output was shown to be consistent with our expectations.

We conclude that the dually flat structure of the manifold of exponential families is still useful even when the potential function is not easy to compute. The HELARS implementation is slower than the ELARS implementation due to the overhead caused by keeping track of the \(\mathbf {Q}_a\) and constantly updating it using the holonomic gradient method. The benefits of using the holonomic gradient method are most visible when the potential function does not have a closed form expression. Then we can either find a Pfaffian system for the potential function by hand, as in our example, or use the theory of D-modules to construct the Pfaffian system from a holonomic ideal annihilating the potential function. Since in exponential families the potential function is the integral of an exponential function, finding the annihilating ideal is relatively easy in many cases. If the resulting ideal is holonomic, we can then use the integration algorithm [18] to get the annihilating ideal of the integral. In cases where the coordinate conversions are not obvious, such as the weighted normal distribution, we can exploit the annihilating ideal to derive relations between the derivatives which allow the recovery of coordinates from \(\mathbf {Q}_a\) alone. Since we keep track of \(\mathbf {Q}_a\) throughout the algorithm, this observation makes coordinate conversions trivial, but may make the vectors \(\mathbf {Q}_a\) larger than necessary.

In its current state, since the algorithm can only handle a certain class of generalized linear models using the canonical link function, a natural next step would be to extend it to an arbitrary generalized linear model.

Notes

A total order on monomials \(\partial ^\alpha \) where \(\partial ^\alpha \prec \partial ^\beta \) implies \(\partial ^\gamma \partial ^\alpha \prec \partial ^\gamma \partial ^\beta \).

after appropriate coordinate conversions.

In Newton’s method, \(\varvec{\Delta }^{(k)} = ({\mathbf{{{\,\mathrm{Jac}\,}}}}(F))^{-1} F\), where F is the same as in (13), and both F and \({\mathbf{{{\,\mathrm{Jac}\,}}}}(F)\) are evaluated at \((\theta ^J, \eta _{{\bar{J}}})^{(k)}\)

References

Agresti, A.: Foundations of Linear and Generalized Linear Models. Wiley Series in Probability and Statistics. Wiley, Hoboken (2015)

Antoniadis, A., Gijbels, I., Verhasselt, A.: Variable selection in additive models using p-splines. Technometrics 54(4), 425–438 (2012)

Ascher, U.M., Greif, C.: A first course in numerical methods. In: Computational Science and Engineering, vol. 7. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2011). https://doi.org/10.1137/1.9780898719987

Augugliaro, L., Mineo, A.M., Wit, E.C.: Differential geometric least angle regression: a differential geometric approach to sparse generalized linear models. J. R. Stat. Soc. Ser. B. Stat. Methodol. 75(3), 471–498 (2013). https://doi.org/10.1111/rssb.12000

Dormand, J.R., Prince, P.J.: A family of embedded Runge-Kutta formulae. J. Comput. Appl. Math. 6(1), 19–26 (1980). https://doi.org/10.1016/0771-050X(80)90013-3

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32(2), 407–499 (2004). https://doi.org/10.1214/009053604000000067

Härkönen, M.: Holonomic extended LARS R implementation. https://github.com/haerski/HELARS (2019)

Hayakawa, J., Takemura, A.: Estimation of exponential-polynomial distribution by holonomic gradient descent. Commun. Stat. Theory Methods 45(23), 6860–6882 (2016). https://doi.org/10.1080/03610926.2014.968735

Hibi, T. (ed.): Gröbner Bases: Statistics and Software Systems. Springer, Tokyo (2013). https://doi.org/10.1007/978-4-431-54574-3

Hirose, Y., Komaki, F.: An extension of least angle regression based on the information geometry of dually flat spaces. J. Comput. Graph. Stat. 19(4), 1007–1023 (2010). https://doi.org/10.1198/jcgs.2010.09064. Supplementary materials available online

Hirose, Y., Komaki, F.: Edge selection based on the geometry of dually flat spaces for Gaussian graphical models. Stat. Comput. 23(6), 793–800 (2013). https://doi.org/10.1007/s11222-012-9347-3

Hirose, Y., Komaki, F.: An estimation procedure for contingency table models based on nested geometry. J. Jpn. Stat. Soc. 45(1), 57–75 (2015). https://doi.org/10.14490/jjss.45.57

Hyvärinen, A.: Estimation of non-normalized statistical models by score matching. J. Mach. Learn. Res. 6, 695–709 (2005)

Koyama, T., Takemura, A.: Calculation of orthant probabilities by the holonomic gradient method. Jpn. J. Ind. Appl. Math. 32, 187–204 (2015). https://doi.org/10.1007/s13160-015-0166-8

McCullagh, P., Nelder, J.A.: Generalized linear models. Monographs on Statistics and Applied Probability. Chapman and Hall, London (1989). https://doi.org/10.1007/978-1-4899-3242-6. Second edition [of MR0727836]

Minka, T.: Old and new matrix algebra useful for statistics (1997). https://www.microsoft.com/en-us/research/publication/old-new-matrix-algebra-useful-statistics/

Nakayama, H., Nishiyama, K., Noro, M., Ohara, K., Sei, T., Takayama, N., Takemura, A.: Holonomic gradient descent and its application to the Fisher–Bingham integral. Adv. Appl. Math. 47(3), 639–658 (2011). https://doi.org/10.1016/j.aam.2011.03.001

Oaku, T.: Algorithms for \(b\)-functions, restrictions, and algebraic local cohomology groups of \(D\)-modules. Adv. Appl. Math. 19(1), 61–105 (1997). https://doi.org/10.1006/aama.1997.0527

Park, M.Y., Hastie, T.: \(L_1\)-regularization path algorithm for generalized linear models. J. R. Stat. Soc. Ser. B Stat. Methodol. 69(4), 659–677 (2007). https://doi.org/10.1111/j.1467-9868.2007.00607.x

Parry, M., Dawid, A.P., Lauritzen, S.: Proper local scoring rules. Ann. Stat. 40(1), 561–592 (2012). https://doi.org/10.1214/12-AOS971

R Core Team: R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna (2017). https://www.R-project.org/

Takayama, N., Koyama, T., Sei, T., Nakayama, H., Nishiyama, K.: hgm: holonomic gradient method and gradient descent (2017). https://CRAN.R-project.org/package=hgm. R package version 1.17

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 58(1), 267–288 (1996). http://www.jstor.org/stable/2346178

Yeh, I.C.: Modeling slump flow of concrete using second-order regressions and artificial neural networks. Cement Concrete Composites 29(6), 474–480 (2007)

Yuan, M., Lin, Y.: Model selection and estimation in the Gaussian graphical model. Biometrika 94(1), 19–35 (2007). https://doi.org/10.1093/biomet/asm018

Yukawa, M., Amari, S.I.: \(\ell _p\)-regularized least squares \((0<p<1)\) and critical path. IEEE Trans. Inform. Theory 62(1), 488–502 (2016). https://doi.org/10.1109/TIT.2015.2501362

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was partly supported by JSPS KAKENHI Grant Numbers JP17K00044, JP18K18008, JST CREST Grant Number JPMJCR1763, and the Vilho, Yrjö and Kalle Väisälä Foundation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Härkönen, M., Sei, T. & Hirose, Y. Holonomic extended least angle regression. Info. Geo. 3, 149–181 (2020). https://doi.org/10.1007/s41884-020-00035-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41884-020-00035-1