Abstract

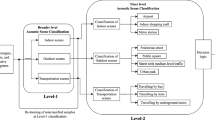

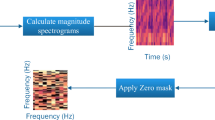

Acoustic Scene Classification (ASC) aims to assign real-world audio to one of a set of predefined classes that identify the environment in which the audio was recorded. With the emergence of deep learning, state-of-the-art ASC algorithms achieve excellent accuracy; in particular, Convolutional Neural Networks (CNNs) have set a new benchmark in ASC. Despite these advances, interest in ASC continues to grow, with the focus shifting from improving accuracy to reducing model complexity. In this work, we introduce AtResNet, a residual atrous CNN for low-complexity acoustic scene classification. AtResNet uses dilated convolutions and residual connections to reduce the number of model parameters. To further improve its performance, we introduce a multi-scale feature representation called the multi-scale mel spectrogram (ms2), which is computed by evaluating the mel spectrogram on the wavelet subbands of the signal. We evaluated AtResNet with ms2 on three benchmark ASC datasets. The results suggest that our method significantly outperforms CNN-based techniques as well as a baseline system that uses the log mel spectrum as the signal representation. AtResNet has 28.73% fewer parameters than a baseline CNN, and with post-training quantization of the network weights its model size is 81 KB. This makes AtResNet suitable for deployment on context-aware devices.
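The parameter saving attributed to dilated (atrous) convolutions can be illustrated with simple arithmetic: a k-tap kernel with dilation d spans d(k - 1) + 1 positions but still has only k trainable weights per input/output channel pair, so a short stack of dilated layers can reach the receptive field of a deeper stack of ordinary ones. The NumPy sketch below is illustrative only. The helper names (`effective_kernel`, `receptive_field`, `dilated_conv1d`, `residual_dilated_block`), the 1-D setting, the dilation rates and the conv-ReLU-conv block layout are assumptions for exposition, not the published AtResNet architecture.

```python
import numpy as np

def effective_kernel(k, d):
    """Span covered by a k-tap kernel with dilation d (d = 1 is an ordinary kernel)."""
    return d * (k - 1) + 1

def conv2d_params(c_in, c_out, k, bias=True):
    """Trainable weights of a k x k convolution; dilation does not change this count."""
    return c_in * c_out * k * k + (c_out if bias else 0)

def receptive_field(kernels, dilations):
    """Receptive field of a stack of stride-1 convolutions."""
    rf = 1
    for k, d in zip(kernels, dilations):
        rf += d * (k - 1)
    return rf

def dilated_conv1d(x, w, d):
    """'Same'-length 1-D dilated cross-correlation with zero padding (len(w) must be odd)."""
    k = len(w)
    pad = d * (k - 1) // 2
    xp = np.pad(x, pad)  # zero padding on both sides
    return np.array([sum(w[j] * xp[i + j * d] for j in range(k)) for i in range(len(x))])

def residual_dilated_block(x, w1, w2, d):
    """Hypothetical residual block: dilated conv -> ReLU -> dilated conv, plus identity shortcut."""
    h = np.maximum(0.0, dilated_conv1d(x, w1, d))
    return x + dilated_conv1d(h, w2, d)  # the shortcut adds no parameters
```

For example, `receptive_field([3, 3, 3], [1, 2, 4])` gives 15, whereas seven undilated 3-tap layers are needed for the same value; every layer has the same weight count (`conv2d_params(32, 32, 3)` is 9248 whether or not it is dilated). How AtResNet arranges its dilation rates and shortcuts is not specified in the abstract.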
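The abstract states only that ms2 evaluates the mel spectrogram on the wavelet subbands of the signal. A minimal NumPy sketch of that idea follows, under assumptions not taken from the paper: an orthonormal Haar wavelet, a dyadic decomposition into detail bands D1 to DJ plus the final approximation AJ, and a Hann-windowed STFT with an HTK-style mel filterbank. Each decimated subband is treated as a signal at its reduced sampling rate, and the hypothetical function `multi_scale_mel` simply returns one log-mel spectrogram per subband; how AtResNet combines these scales is not described in the abstract.

```python
import numpy as np

def haar_dwt(x):
    """One level of the orthonormal Haar DWT (assumed wavelet; the paper may use another)."""
    x = x[: len(x) // 2 * 2]  # drop a trailing sample if the length is odd
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)  # (approx, detail)

def wavelet_subbands(x, levels):
    """Return [D1, ..., D_levels, A_levels]; each level halves the sampling rate."""
    bands, approx = [], x
    for _ in range(levels):
        approx, detail = haar_dwt(approx)
        bands.append(detail)
    bands.append(approx)
    return bands

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular mel filters, shape (n_mels, n_fft // 2 + 1)."""
    bins = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)  # rfft bin frequencies
    hz_pts = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, bins.size))
    for m in range(n_mels):
        lo, center, hi = hz_pts[m], hz_pts[m + 1], hz_pts[m + 2]
        rising = (bins - lo) / (center - lo)
        falling = (hi - bins) / (hi - center)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(x, sr, n_fft=512, hop=256, n_mels=40):
    """Hann-windowed STFT power -> mel filterbank -> log; returns (n_mels, n_frames)."""
    if len(x) < n_fft:
        raise ValueError("signal is shorter than n_fft")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T
    return np.log(mel + 1e-10).T

def multi_scale_mel(x, sr, levels=2, **kwargs):
    """Hypothetical ms2 sketch: one log-mel spectrogram per wavelet subband."""
    feats = []
    for i, band in enumerate(wavelet_subbands(x, levels)):
        # D_(i+1) has rate sr / 2**(i+1); the final approximation shares the last detail's rate
        band_sr = sr / 2 ** min(i + 1, levels)
        feats.append(log_mel_spectrogram(band, band_sr, **kwargs))
    return feats
```

With a 1 s signal at 16 kHz and `levels=2`, this yields three spectrograms of shapes (40, 30), (40, 14) and (40, 14). Because decimation folds each detail band down to baseband with its frequency axis reversed, a practical implementation would need to decide how to align or stack these scales.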
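The abstract attributes the 81 KB model size to post-training quantization of the network weights but does not state the scheme. As an assumption, the NumPy sketch below uses symmetric per-tensor 8-bit quantization: each float32 weight tensor is mapped to int8 with a single scale factor, which cuts weight storage by a factor of four at the cost of a rounding error of at most half a quantization step. The function names are hypothetical.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization: w is approximately scale * q, with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float32 weights, e.g. to measure the quantization error."""
    return q.astype(np.float32) * np.float32(scale)

def size_kb(n_params, bytes_per_param):
    """Weight storage in KB (1 KB = 1024 bytes), ignoring scales and file-format overhead."""
    return n_params * bytes_per_param / 1024.0
```

For instance, 2048 weights occupy 8 KB as float32 (`size_kb(2048, 4)`) but 2 KB as int8 (`size_kb(2048, 1)`). Real toolchains may instead use per-channel scales, asymmetric zero points or 16-bit floats.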

Data Availability Statement

The authors confirm that the audio data supporting the findings of this study (referred to as DCASE18, DCASE19, and DCASE20 in the manuscript) are available on Zenodo under the identifiers https://doi.org/10.5281/zenodo.1228142, https://doi.org/10.5281/zenodo.2589280, and https://doi.org/10.5281/zenodo.3819968.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial, general and institutional interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Madhu, A., Suresh, K. AtResNet: Residual Atrous CNN with Multi-scale Feature Representation for Low Complexity Acoustic Scene Classification. Circuits Syst Signal Process 41, 7035–7056 (2022). https://doi.org/10.1007/s00034-022-02107-2

DOI: https://doi.org/10.1007/s00034-022-02107-2