Pankaj Bhambri, Harpreet Kaur, Akarshit Gupta and Jaskaran Singh

Department of Information Technology, Guru Nanak Dev Engineering College, Ludhiana

Corresponding Author E-mail: pkbhambri@gmail.com

DOI : http://dx.doi.org/10.13005/ojcst13.0203.05

Article Publishing History

Article Received on : 09 Aug 2020

Article Accepted on : 26 Oct 2020

Article Published : 30 Dec 2020

Plagiarism Check: Yes

Reviewed by: Dr Ranjan Maity

Second Review by: Dr. Parag Verma

ABSTRACT:

Modern human activity recognition systems are mainly trained and used on video streams and image data, from which they learn the features and action variations of similar or related movements. Human activity recognition plays a significant role in human-to-human and human-computer interaction. Manually driven systems are time-consuming and costly. In this project, we aim to design a cost-effective and faster Human Activity Recognition System that can process both video and images to recognize the activity being performed, thereby aiding the end user in applications such as surveillance and assistance. The system is intended to be not only cost-effective but also a utility-based system that can be incorporated into a large number of applications that require recognition, saving time while maintaining good accuracy. It can also help blind people gain knowledge of their surroundings.

KEYWORDS:

Deep Learning; Neural Network

Copy the following to cite this article:

Bhambri P, Kaur H, Gupta A, Singh J. Human Activity Recognition System. Orient.J. Comp. Sci. and Technol; 13(2,3).

Copy the following to cite this URL:

Bhambri P, Kaur H, Gupta A, Singh J. Human Activity Recognition System. Orient.J. Comp. Sci. and Technol; 13(2,3). Available from: https://bit.ly/2LkUNop

Introduction

This project uses video and image datasets to recognize human activity. The problem is addressed with a neural network architecture. For videos, the architecture uses ResNet-34 pre-trained on the Kinetics dataset, further refined through transfer learning on a more focused set of activities. For images, it uses a caption generation technique: it builds a sentence describing the activity in an image from the Flickr8k dataset, in which a CNN-RNN (LSTM) model extracts features from the image and the caption text is used to learn the vocabulary needed to formulate the sentence.
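The vocabulary-learning step for caption generation can be illustrated with a minimal sketch. It assumes whitespace tokenization, the marker tokens `startseq` and `endseq`, and a `min_count` threshold; none of these are stated in the article, and the two captions are made-up examples rather than Flickr8k data.

```python
from collections import Counter

START, END = "startseq", "endseq"  # assumed marker tokens, not from the article


def build_vocab(captions, min_count=1):
    """Map each sufficiently frequent word to an integer id (0 is left free for padding)."""
    counts = Counter(word for c in captions for word in c.lower().split())
    words = sorted(w for w, n in counts.items() if n >= min_count)
    return {w: i for i, w in enumerate([START, END] + words, start=1)}


def encode(caption, vocab):
    """Wrap a caption in start/end markers and convert known words to ids."""
    tokens = [START] + caption.lower().split() + [END]
    return [vocab[t] for t in tokens if t in vocab]


def training_pairs(caption, vocab):
    """Return (prefix, next_word) pairs: the decoder sees the prefix and predicts the next id."""
    ids = encode(caption, vocab)
    return [(ids[:i], ids[i]) for i in range(1, len(ids))]


# Made-up example captions for illustration only.
captions = ["A man is riding a bike", "A dog is running"]
vocab = build_vocab(captions)
pairs = training_pairs("A dog is running", vocab)
```

Each pair would be combined with the image features during training, so the network learns to predict the next word given the image and the words generated so far.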

In this project, we intend to design a cost-effective and faster Human Activity Recognition System that can process both video and images to identify the activity being performed, thus assisting end users in applications such as surveillance and assistance. The system is intended to be not only cost-effective but also a utility-based system that can be integrated into a wide variety of applications that need a recognition process, saving time while maintaining good accuracy. Integrated into a handheld device, it can also help blind people understand what is happening around them.

Objectives

The main objectives of the design of the Human Activity Recognition (HAR) System are:

- To understand different models and techniques of Human Activity Recognition systems based on research papers.

- To recognize various human activities from video or image data.

- To provide a cost-effective and faster Human Activity Recognition system.

- To provide a HAR program that can be incorporated into a variety of real-time applications, such as surveillance and aiding blind people.

- To automate the process of activity recognition from a video stream or an image.

Problem Formulation

There is an ever-rising need to improve the methods of human-computer interaction and the ways in which computers understand human actions and activities. There is a large population of elderly and blind people in the world; the problem was to help them understand and interact more with their environment and to aid them in various activities. This led to the formulation of a human activity recognition system, which can also be used in applications beyond aiding the elderly and blind, such as video surveillance.

Existing System

The existing system was manual: a person had to sit in front of a monitor to observe and guide human activities. It was hectic, time-consuming, costly, and prone to human error and negligence. Later, some systems began using sensor data to recognize human activities, but the sensors had to be worn by the user, which limited the scope of activity recognition in open environments.

Proposed System

Unlike the existing system, the proposed system takes video and images as input and recognizes the activity being performed in them. It is a much faster and more cost-effective solution. It uses deep learning to recognize the activities. The system can be used, incorporated or expanded to cover a wide range of applications; hence, it acts as a base system for various applications and tasks. Moreover, it can reduce the need for additional staff for entering data, thereby reducing costs for companies considerably.

Unique Features of the System

The unique features of the Human Activity Recognition system are listed as follows:

- The system recognizes activity from both video and image data.

- It is very easy to use and understand.

- It is a base system that can be used in a number of applications.

- It uses CNN-RNN, LSTM and ResNet architectures to solve the problem.

- It recognizes the activity in videos with good accuracy, and it generates a sentence describing the activity in an image with decent accuracy.
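How a describing sentence might be generated word by word can be sketched with greedy decoding. The article does not say which decoding strategy is used, so greedy selection is an assumption here, as are the `startseq` and `endseq` markers. `toy_model` is a hypothetical stand-in for the trained CNN-RNN network and simply replays a fixed sentence.

```python
def greedy_caption(next_word_scores, image_features, max_len=20,
                   start="startseq", end="endseq"):
    """Build a caption one word at a time, always taking the highest-scoring word."""
    words = [start]
    for _ in range(max_len):
        scores = next_word_scores(image_features, words)  # dict: word -> score
        best = max(scores, key=scores.get)
        if best == end:
            break
        words.append(best)
    return " ".join(words[1:])  # drop the start marker


def toy_model(image_features, words):
    """Hypothetical stand-in for the trained network: replays a fixed sentence."""
    script = ["a", "man", "is", "riding", "a", "bike", "endseq"]
    target = script[len(words) - 1]
    return {w: (1.0 if w == target else 0.0) for w in set(script)}


caption = greedy_caption(toy_model, image_features=None)
short_caption = greedy_caption(toy_model, image_features=None, max_len=3)
```

In a real system, `next_word_scores` would run the network on the image features and the partial sentence; the `max_len` cap keeps decoding finite if the end marker is never predicted.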

Methodology

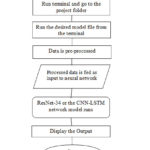

A flowchart is a diagram that represents an algorithm, workflow or process. It shows the steps of various kinds and their order by connecting them with arrows [8]. In the Human Activity Recognition System, data is first passed through a pre-processing function that makes it suitable to be fed as input to the neural network; the data then goes through the network, which produces the output.
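The pre-process, network, output flow can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: pre-processing is reduced to splitting frame indices into fixed-length clips, the clip length of 16 frames is assumed, scores are averaged across clips, and `toy_classifier` is a hypothetical stand-in for the network.

```python
def sample_clips(num_frames, clip_len=16):
    """Split frame indices 0..num_frames-1 into consecutive, non-overlapping clips."""
    return [list(range(s, s + clip_len))
            for s in range(0, num_frames - clip_len + 1, clip_len)]


def average_scores(per_clip_scores):
    """Average the class scores of all clips into one score per class."""
    n = len(per_clip_scores)
    labels = per_clip_scores[0].keys()
    return {label: sum(s[label] for s in per_clip_scores) / n for label in labels}


def recognize(num_frames, classify_clip, clip_len=16):
    """Pre-process -> network -> output, mirroring the flowchart."""
    clips = sample_clips(num_frames, clip_len)        # pre-processing
    if not clips:
        raise ValueError("video is shorter than one clip")
    scores = average_scores([classify_clip(c) for c in clips])  # network
    return max(scores, key=scores.get), scores        # output


def toy_classifier(clip):
    """Hypothetical stand-in for the network: scores depend only on the clip position."""
    if clip[0] < 32:
        return {"walking": 0.7, "running": 0.3}
    return {"walking": 0.4, "running": 0.6}


label, scores = recognize(num_frames=50, classify_clip=toy_classifier)
```

A real pre-processing function would also decode, resize and normalize the frames before they reach the network; those steps are omitted here to keep the sketch self-contained.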

User Interface Representation

User interface modeling is a development technique used by computer application programmers. Today's user interfaces (UIs) are complex software components that play a crucial role in the usability of an application. The development of a UI therefore requires not only guidelines and best-practice reports, but also a development process that includes the elaboration of visual models and a standardized notation for this visualization [25]. The PyCharm IDE GUI style is used, which is intuitive, user-friendly and suited to everyday usage habits. Its design principles are formal and widely recognized, and they emphasize which action the user is expected to take.

Results and Discussions

The Human Activity Recognition System predicts activities with good accuracy on videos and with decent accuracy on images. Below are some snapshots of the training code, run on Colab:

Conclusion and Future Scope

Since computer vision is a trending topic these days, systems such as Human Activity Recognition systems are useful and effective for a variety of applications, whether surveillance, monitoring, or aiding elderly and blind people. Such a system not only provides additional comfort to end users but can also be deployed in organizations to reduce employee workload. The model shows good results on video streams and performs decently on image data. Activity recognition systems are of great importance today because of the convenience they offer and the problems they solve. Demand for activity recognition in monitoring, surveillance, video segmentation and similar tasks is growing, and this system can help meet it. The system can be incorporated into mobile apps to further aid elderly and blind people. It is cost-effective, saves considerable time, and is less prone to human error than manual monitoring. It acts as a base solution for many other applications involving activity recognition. Hence, the system is beneficial for both individuals and organizations, for general or specialized purposes.

This project has considerable scope for future work. Firstly, the video recognition code can be further fine-tuned using transfer learning, and much bigger datasets can be used to further increase the accuracy of the model. Moreover, web and mobile apps can be built that call these Python scripts via an API to provide activity recognition on users' mobile devices. Such apps can also help elderly and blind people understand and interact with their surroundings, among many other real-time applications of an activity recognition system.
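One way such an API could wrap the recognition scripts is sketched below using only the Python standard library. Everything specific here is an assumption for illustration: the JSON field `video`, the port, and `recognize_activity`, which is a hypothetical placeholder that returns a fixed label instead of running the model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def recognize_activity(video_path):
    """Hypothetical placeholder for the recognition script; returns a fixed label."""
    return {"video": video_path, "activity": "unknown"}


def handle_payload(raw_body):
    """Turn a JSON request body into (status_code, response_dict)."""
    try:
        payload = json.loads(raw_body)
        video_path = payload["video"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'video' field"}
    return 200, recognize_activity(video_path)


class RecognitionHandler(BaseHTTPRequestHandler):
    """Answers POST requests with the recognition result as JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_payload(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8000):
    """Start the server on localhost; call this explicitly to run it (assumed port)."""
    HTTPServer(("127.0.0.1", port), RecognitionHandler).serve_forever()
```

Keeping the request handling in `handle_payload`, separate from the HTTP class, means the same logic can be checked without starting a server, for example `handle_payload(b'{"video": "clip.mp4"}')`.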

Acknowledgement

We are very thankful to Dr. Sehijpal Singh, Principal, Guru Nanak Dev Engineering College (GNDEC), Ludhiana, for providing the opportunity to carry out this project research in the college labs, which have the facilities required for the effective execution of our project. The continuous advice and motivation received from Dr. Kiran Jyoti, H.O.D., IT Department, GNDEC Ludhiana, has been of tremendous help in carrying out the project work and is gratefully acknowledged. We would like to convey our sincere gratitude to our Project Leader, Dr. Pankaj Bhambri, and Project Boss, Prof. Harpreet Kaur, without whose wise advice and encouragement it would have been difficult to complete the project in this way. We would also like to express our appreciation to the other faculty members of the Information Technology Department of GNDEC for their academic assistance in this research. Finally, we are indebted to all those who have contributed to this work, directly or indirectly.

References

- “Activity recognition-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Activity_recognition.

- “Human Activity Recognition,” [Online]. Available: https://www.frontiersin.org/articles/10.3389/frobt.2015.00028/full.

- “Research-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Research.

- “Software Requirements,” [Online]. Available: https://www.tutorialspoint.com/software_engineering/software_requirements.htm.

- R. Y. Lee, “Software Engineering: A Hands-On Approach,” [Online]. Available: https://books.google.co.in/books?id=zdBEAAAAQBAJ&printsec=frontcover&dq=Software+Engineering:+A+Hands-On+Approach+By+Roger+Y.+Lee&hl=en&sa=X&ved=0ahUKEwj59Pjlt8nmAhXdzTgGHQALDhwQ6AEIKTAA.

- “Introduction to Software Engineering/Architecture/Design-Wikipedia,” [Online]. Available: https://en.wikibooks.org/wiki/Introduction_to_Software_Engineering/Architecture/Design.

- S.K. Panda, G.S.M. Reddy, S.B.Goyal, T.K., P. Bhambri, M.V. Rao, A.S. Singh, A.H. Fakih, P.K. Shukla, P.K. Shukla, A.B. Gadicha, and C.J. Shelke, “Method for Management of Scholarship of Large Number of Students based on Blockchain”, Indian Patent issue 36/2019, application 201911034937, (2019), September 06.

- “Flowchart-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Flowchart.

- “Data-Flow Diagrams-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Data-flow_diagram.

- “Residual Neural Network-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Residual_neural_network.

- “Overview of ResNets,” [Online]. Available: https://towardsdatascience.com/an-overview-of-resnet-and-its-variants-5281e2f56035.

- “CNN-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Convolutional_neural_network.

- “Understanding LSTMs,” [Online]. Available: https://colah.github.io/posts/2015-08-Understanding-LSTMs/.

- “Project Implementation,” [Online]. Available: https://sswm.info/humanitarian-crises/urban-settings/planning-process-tools/implementation-tools/project-implementation.

- J. Kaur, P.Bhambri and K. Sharma, “Wheat Production Analysis based on Native Bayes Classifier”, IJAEMA, vol. 11, no. 9, (2019), pp. 705-709.

- “Python Features,” [Online]. Available: https://www.javatpoint.com/python-features.

- “Advantages and Disadvantages of Python,” [Online]. Available: https://medium.com/@mindfiresolutions.usa/advantages-and-disadvantages-of-python-programming-language-fd0b394f2121.

- “numpy-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/NumPy.

- P.Bhambri and O.P. Gupta, “Analyzing Induction Attributes of Decision Tree”, IJCTR, vol. 1, no. 7, (2013), pp. 166-169.

- “Keras-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Keras.

- “Matplotlib-Wikipedia,” [Online]. Available: https://en.wikipedia.org/wiki/Matplotlib.

- W. CHU, K.T. LEE, W. LUO, P. Bhambri, and S. Kautish, “Predicting the Security Threats of Internet Rumors and Spread of False Information Based On Sociological Principle”, Computer Standards & Interfaces, (2020), ISSN 0920-5489, https://doi.org/10.1016/j.csi.2020.103454.

- “PEP8,” [Online]. Available: https://pragmaticcoders.com/blog/pep8-and-why-is-it-important/.

- “Software Testing Overview,” [Online]. Available: https://www.tutorialspoint.com/software_engineering/software_testing_overview.htm.

- “User Interface Modelling,” [Online]. Available: https://en.wikipedia.org/wiki/User_interface_modeling.

This work is licensed under a Creative Commons Attribution 4.0 International License.
