Abstract

New research is being published at a rate, at which it is infeasible for many scholars to read and assess everything possibly relevant to their work. In pursuit of a remedy, efforts towards automated processing of publications, like semantic modelling of papers to facilitate their digital handling, and the development of information filtering systems, are an active area of research. In this paper, we investigate the benefits of semantically modelling citation contexts for the purpose of citation recommendation. For this, we develop semantic models of citation contexts based on entities and claim structures. To assess the effectiveness and conceptual soundness of our models, we perform a large offline evaluation on several data sets and furthermore conduct a user study. Our findings show that the models can outperform a non-semantic baseline model and do, indeed, capture the kind of information they’re conceptualized for.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Citations are a central building block of scholarly discourse. They are the means by which scholars relate their research to existing work—be it by backing up claims, criticising, naming examples, or engaging in any other form. Citing in a meaningful way requires an author to be aware of publications relevant to their work. Here, the ever increasing rate of new research being published poses a serious challenge. With the goal of supporting researchers in their choice of what to read and cite, approaches to paper recommendation and citation recommendation have been an active area of research for some time now [2].

In this paper, we focus on the task of context-aware citation recommendation (see e.g. [7, 10, 11, 14]). That is, recommending publications for the use of citation within a specific, confined context (e.g. one sentence)—as opposed to global citation recommendation and paper recommendation, where publications are recommended with respect to whole documents or user profiles. Within context-aware citation recommendation, we specifically investigate the explicit semantic modelling of citation contexts. While implicit semantic information (such as what is captured by word embeddings) greatly benefits scenarios like keyword search, we argue that the specificity of information needs in academia—e.g. finding publications that use a certain data set or address a specific problem—require a more rigidly modelled knowledge representations, such as those proposed in [21] or [8]. Regarding quality, such knowledge representations (e.g. machine readable annotations of scientific publications) would ideally be created manually by the researchers themselves (see [13]). However, neither have such ideals become the norm in academic writing so far, nor are large scale data sets with manually created annotations available. Thus, we create semantic models of citation contexts using NLP techniques to automatically derive such knowledge representations. Using our models we investigate if and when such novel representation formats are beneficial for context-aware citation recommendation.

Overall, we make the following contributions:

-

1.

We propose novel ways of deriving semantically-structured representations from citation contexts, based on entities and claims, intended for context-aware citation recommendation. To the best of our knowledge, this is the first approach of its kind, as previous uses of semantically-structured representations for citation recommendation were only ever applied to whole papers (i.e. in a setting where richer information including authors, list of references, venue, etc. is available).

-

2.

We perform a large-scale offline evaluation using four data sets, in which we test the effectiveness of our models.

-

3.

We also perform a user study to further evaluate the performance of our models and assess their conceptual soundness.

-

4.

We make the code for our models and details of our evaluation publicly available.Footnote 1

The rest of the paper is structured as follows: In Sect. 2 we outline existing works on citation recommendation. We then describe in Sect. 3 the novel semantic approaches to citation recommendation. Sect. 4 is dedicated for the evaluation of our approaches. We conclude in Sect. 5.

2 Related Work

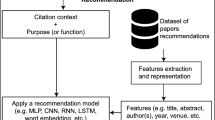

Citation recommendation can be classified into global citation recommendation and context-aware (sometimes also referred to as “local”) citation recommendation [10]. Various approaches have been published in both areas, but there is, to the best of our knowledge, not one that both (a) is context-aware and (b) uses explicit semantic representations of citation contexts. We illustrate this in Table 1. In the following, we therfore outline the most related works on (semantic) global citation recommendation (upper right cell in Table 1), and (non-semantic) context-aware citation recommendation (lower left cell in Table 1).

Global Citation Recommendation. Global citation recommendation is characterized as a task for which the input of the recommendation engine is not a specific citation context but a whole paper. Various approaches have been published for global citation recommendation, some of which can also be used for paper recommendation, i.e., for recommending papers for the purpose of reading [1]. A few semantic approaches to global citation recommendation exist (see Table 2). They are based on a semantically-structured representation of papers’ metadata (e.g., authors, title, abstract) [28, 29] and/or papers’ contents [19]. Note that the approaches proposed in this paper are not using any of the papers’ metadata or full text, as our goal is to provide fine-grained, semantically suitable recommendations for specific citation contexts.

Context-Aware Citation Recommendation. Context-aware citation recommendation approaches recommend publications for a specific citation context and are thus also called “local” citation recommendation approaches. Existing context-aware citation recommendation approaches soly rely on lexical and syntactic features (n-grams, part-of-speech tags, word embeddings etc.) but do not attempt to model citation contexts in an explicit semantic fashion. Table 3 gives an overview of context-aware citation recommendation approaches. We can mention SemCir [29] as the only approach we are aware of that could be regarded as a semantic approach to context-aware citation recommendation. The explicit semantic representations are, however, not generated from citation contexts (not context-aware), but from papers (global), that are textually (not necessarily semantically) similar to the citation contexts. We therefore categorize it as a semantic global approach.

3 Approach

To ensure wide coverage and applicability of our citation context models, we base our selection of structures to model on a typology of citation functions from the field of citation context analysis ([22], built upon [26]). Note that, because citation context analysis is primarily concerned with the intent of the author rather than the content of the citation context, we cannot use the functions as a basis for our models directly. Instead we inspect sample contexts of each function type and thereby identify named entity (NE) and claim as semantic structures of interest, as illustrated in Table 4. Note that the example contexts listed to have no structure (“-” in the Structure column) may contain named entities and claims as well (e.g. “DBLP” or “Lamers et al. base their definition of the author’s name”), but these are (in the case of NEs) not representative of the cited work or (in the case of claims) just statements about a publication rather than statements being backed by the cited work.

The following sections will describe our entity-based and claim-based models for context-aware citation recommendation.

3.1 Entity-Based Recommendation

The intuition behind an entity-based approach is that there exists a reference publication for a named entity mentioned in the citation context. For instance, this can be a data set (“CiteSeerx [37]”), a tool (“Neural ParsCit [23]”), or a (scientific) concept (“Semantic Web [37]”). In a more loose sense this can also include publications being referred to as examples (“approaches to context-aware citation recommendation [5,6,7, 10,11,12, 14, 16]”). Because names of methods, data sets, tools, etc. in academia often are neologisms and only the most widely used ones of them are reflected in resources like DBpedia, we use a set of noun phrases found in academic publications as surrogates for named entities (instead of performing entity linking). For this, we extract noun phrases from the arXiv publications provided by [24] and filter out items that appear only once. In doing so we end up with a set of 2,835,929 noun phrasesFootnote 2 (NPs) that we use.

In the following, we define two NP-based representations of citation contexts, \({R_{\text {NP}}}\) and \({R_{\text {NPmrk}}^{2+}}\). For this, \(\mathcal {P}\) shall denote our set of NPs and c shall denote a citation context.

\({\varvec{R}}_{\mathbf {NP}}\). We define \(R_{\text {NP}}(c)\) as the set of maximally long NPs contained in c. Formally, \(R_{\text {NP}}(c) = \{t|t\text { appears in }c \wedge t\in \mathcal {P} \wedge t^{+pre} \notin \mathcal {P}\wedge t^{+suc} \notin \mathcal {P}\}\) where \(t^{+pre}\) and \(t^{+suc}\) denote an extension of t using its preceding or succeeding word respectively. A context “This has been done for language model training [37]”, for example, would therefore have “language model training” in its representation, but not “language model”.

\({\varvec{R}}_{\mathbf {NPmrk}}^{\mathbf {2+}}\). We define \({R_{\text {NPmrk}}^{2+}(c)}\) as a subset of \({R_{\text {NP}}(c)}\) containing, if present, the NP of minimum word length 2 directly preceding the citation marker which a recommendation is to be made for. Formally, \(R_{\text {NPmrk}}^{2+}(c) = \{t|t\in R_{\text {NP}}(c)\wedge len(t)\ge 2 \wedge t\text { directly precedes } m\}\) where m is the citation marker in c that a prediction is to be made for.

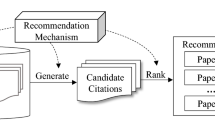

Recommendation. As is typical in context-aware citation recommendation [5, 6, 10] we aggregate citation contexts referencing a publication to describe it as a recommendation candidate. To that end, we define frequency vector representations for single citation contexts and documents as follows. A citation context vector is \(V(R(c)) = (t_{1}, t_{2}, ..., t_{|\mathcal {P}|})\), where \(t_{i}\) denotes how often the ith term in \(\mathcal {P}\) appears in R(c). A document vector then is a sum of citation context vectors \(\sum \limits _{c \in \varrho (d)} V(R(c))\), where \(\varrho (d)\) denotes the set of citation contexts referencing d. Similarities can then be calculated as the cosine of context and document vectors.

3.2 Claim-Based Recommendation

Our claim-based approach is motivated by the fact that citations are used to back up claims (see Table 4). These can, for example, be findings presented in a specific publication (“It has been shown, that ... [37].”) or more general themes found across multiple works (“... is still an unsolved task [37–39].”).

For the extraction of claims, we considered a total of four state of the art [30] information extraction tools (PredPatt [27], Open IE 5.0 [17], ClausIE [4] and Ollie [18]) and found PredPatt to give the best quality resultsFootnote 3. For the simple sentence “The paper shows that context-based methods can outperform global approaches.”, Listing 1.1 shows the user interface output of PredPatt and Fig. 1 its internal representation using Universal Dependencies (UD) [20].

Because the predicates and especially arguments in the PredPatt user interface output can get very long—e.g. “can outperform” (including the auxiliary verb “can”) and “context-based methods can outperform global approaches” (unlikely to appear in another citation context with the exact same wording)—we build our claim-based representation \(R_{\text {claim}}\) from UD trees, as explained in the following section.

\({\varvec{R}}_{\mathbf {claim}}\). For each claim that PredPatt detects, it internally builds one UD tree. To construct our claim-based representation \(R_{\text {claim}}\), we traverse each tree, identify the predicate and its arguments (subject and object) and save these in tuples. The exact procedure for this is given in Algorithm 1. If a sentence uses a copula (be, am, is, are, was), the actual predicate is a child node of the root with the relation type “cop”. This is resolved at marker (a). For the identification of useful arguments (markers (b\(_\mathbf {1}\)) and (b\(_\mathbf {2}\)) in Algorithm 1), we look at all nouns within the UD tree and resolve compounds (“compound”, “mwe”, “name” relations), phrases split by formatting (“goeswith”), conjunctions (“conj”) as well as adjectival and adverbial modifiers (“amod”, “advmod”). To give an example for this, the noun “methods” in both trees in Fig. 1 has the adjectival modifier “context-based”. In such a case our model would not choose “methods” as an argument to “outperform” but “context-based methods”. Listing 1.2 shows the complete representation generated for the example sentence.

Recommendation. For a set of predicate-argument tuples \(\mathcal {T}\), we define frequency vector representations of citation contexts and documents as follows. A citation context vector is \(V(R(c)) = (t_{1}, t_{2}, ..., t_{|\mathcal {T}|})\), where \(t_{i}\) denotes how often the ith tuple in \(\mathcal {T}\) appears in R(c). A document vector, again, is a sum of citation context vectors \(\sum \limits _{c \in \varrho (d)} V(R(c))\), where \(\varrho (d)\) is the set of citation contexts referencing d. Similarities are then calculated as the cosine of TF-IDF weighted context and document vectors.

\({\varvec{R}}_{\mathbf {claim+BoW}}\). In addition to \(R_{\text {claim}}\), we define a combined model \(R_{\text {claim+BoW}}\) as a linear combination of similarity values given by \(R_{\text {claim}}\) and an bag-of-words model (BoW). Similarities in the combined model are calculated as \(sim(A,B)=\sum \limits _{m\in \mathcal {M}}\alpha _msim_m(A,B)\) of the models \(\mathcal {M}=\{R_{\text {claim}},\text {BoW}\}\) with the coefficients \(\alpha _{R_{\text {claim}}}=1\) and \(\alpha _{\text {BoW}}=2\).

4 Evaluation

We evaluate our models in a large offline evaluation as well as a user study. In total, we compare four models—\({R_{\text {NP}}}\), \({R_{\text {NPmrk}}^{2+}}\), \(R_{\text {claim}}\) and \(R_{\text {claim+BoW}}\)—against a bag-of-words baseline (BoW). Our choice of a simple BoW model for a baseline is motivated as follows. Because our entity-based and claim-based models are, in their current form, string based, they can be seen as a semantically informed selection of words from the citation context. In this sense, they work akin to what is done in reference scope identification [15]. To evaluate the validity of the selections of words that our models lay focus on, we use the complete set of words contained in the context (BoW) to compare against. Comparing our models against deep learning based approaches (e.g. based on embeddings) would not provide a comparison in this selection behavior, and are therefore was not considered.

4.1 Offline Evaluation

Our offline evaluation is performed in a citation re-prediction setting. That is, we take existing citation contexts from scientific publications and split them into training and test subsets. The training contexts are used to learn the representations of the cited documents. The test contexts are stripped of their citations, used as input to our recommender systems and the resulting recommendations checked against the original citations.

Table 5 shows the four data sources we use as well as applied filter criteria. RefSeer and ACL-ARC are often used in related work (e.g. [5, 7]), we therefore use both of them and two additional large data sets to ensure a thorough evaluation. Table 6 gives an overview of key properties of the training and test data for the evaluation. We split our data according to the citing paper’s publication date and report #Candidate docs: the number of candidate documents to rank for a recommendation; #Test set items: the number of test set items (unit: citation contexts); Mean CC/RC: the mean number of citation contexts per recommendation candidate in the training set (i.e., a measure for how well the recommendation candidates are described, giving insight into how difficult the recommendation task for each of the data sets is).

Figure 2 shows the results of our evaluation. We measure NDCG, MAP, MRR and Recall at cut-offs from 1 to 10. Note that the evaluation using the arXiv data differs from the other cases in two aspects. First, it is the only case where we can apply \({R_{\text {NPmrk}}^{2+}}\), because citation marker positions are given. Second, because for citation contexts with several citations (cf. Table 4, “Exemplification”) the data set lists several cited documents (instead of just a single one), we are able to treat more than a single re-predicted citation as valid. We do this by counting re-predicted “co-citations” as relevant when calculating MAP scores and give them a relevance of 0.5 in the NDCG calculation. This also means that, looking at higher cut-offs, NDCG and MAP values can decrease because ideal recommendations require relevant re-predictions on all ranks above the cut-off.

As for the performance of our models shown in Fig. 2, we see that for each of the data sets \(R_{\text {claim+BoW}}\) outperforms the BoW baseline in each metric and for all cut-off values.Footnote 4 \(R_{\text {claim}}\) and \({R_{\text {NP}}}\) do not compare in performance with the two aforementioned. This suggests that the claim structures we model with \(R_{\text {claim}}\) are not enough for well performing recommendations on their own, but do capture important information that non-semantic models (BoW) miss. \({R_{\text {NPmrk}}^{2+}}\), only present in the arXiv evaluation, gives particularly good results for lower cut-offs and performs especially well in the MRR metric. It performs the worst at high cut-offs measured by NDCG. Note that \({R_{\text {NPmrk}}^{2+}}\) is only evaluated for test set items, where the model was applicable (i.e. where a noun phrase of minimum length 2 is directly preceding the citation marker; cf. Sect. 3.1). For our evaluation this was the case for 100,308 out of the 490,018 test set items (20.5%). The evaluation results for the citation marker-aware model \({R_{\text {NPmrk}}^{2+}}\) indicate that it is comparatively well suited to recommend citations where there is one particularly fitting publication (e.g. a reference paper) and less suited for exemplifications (cf. Table 4).

4.2 User Study

To obtain more insights into the nature of our evaluation data, as well as a better understanding of our models, we perform a user study in which two human raters (the two authors) judge input-output pairs of our offline evaluation (i.e. citation contexts and the recommendations given for them). For this, we randomly choose 100 citation contexts from the arXiv evaluation, so that we can include \({R_{\text {NPmrk}}^{2+}}\). For each input context, we show raters the top 5 recommendations of the 3 best performing models of the offline evaluation, i.e., BoW, \(R_{\text {claim+BoW}}\) and \({R_{\text {NPmrk}}^{2+}}\) models (resulting in \(100\times 5\times 3=1500\) items). Judgments are performed by looking at each citation context and the respective recommended paper. In addition, we let the raters judge the type of citation (Claim, NE, Exemplification, Other; cf. Table 4).

Table 7 shows the results based on the raters’ relevance judgments. We present measurements for all contexts, as well as each of the citation classes on its own. We note that \(R_{\text {claim+BoW}}\) and BoW are close, but in contrast to the offline evaluation, \(R_{\text {claim+BoW}}\) only outperforms BoW in the Recall metric. In the case of NE type citations, the \({R_{\text {NPmrk}}^{2+}}\) model performs better than the other two models in all metrics. Furthermore, we can see that both \(R_{\text {claim+BoW}}\) and \({R_{\text {NPmrk}}^{2+}}\) achieve their best results for the type of citation they’re designed for—Claim and NE respectively. This indicates that both models actually capture the kind of information they’re conceptualized for. Compared to the offline evaluation, we measure higher numbers overall. While the user study is of considerably smaller scale and a direct comparison therefore not necessarily possible, the notably higher numbers indicate, that a re-prediction setting involves a non-negligible number of false negatives (actually relevant recommendations counted as not relevant).

4.3 Main Findings

The entity-based model \({R_{\text {NPmrk}}^{2+}}\), which captures noun phrases preceding the citation marker, performs best at low cut-offs and in the MRR metric. Low cut-offs and measuring the MRR can be interpreted as emulating citations for reference publications. This interpretation is also backed by the results of the user study, where \({R_{\text {NPmrk}}^{2+}}\) outperformed all other models when recommending for citation contexts that referenced a named entity or concept. We therefore conclude that \({R_{\text {NPmrk}}^{2+}}\) is well suited for recommending such types of citations. Our claim-based model \(R_{\text {claim}}\) does not compare in performance to a BoW baseline, but \(R_{\text {claim+BoW}}\) outperforms aforementioned. We take this as an indication that the claim representation encodes important information which the non-semantic BoW model is not able to capture. In the user study \(R_{\text {claim+BoW}}\) performs best for citation contexts, in which a claim is backed by the target citation. This suggests that the model indeed captures information related to claim structures.

5 Conclusion

In the field of context-aware citation recommendation, the explicit semantic modeling of citation contexts is not well explored yet. In order to investigate the merit of such approaches, we developed semantic models of citation contexts based on entities as well as claim structures. We then evaluated our models on several data sets in a citation re-prediction setting and furthermore conducted a user study. In doing so, we could demonstrate their applicability and conceptual soundness. The next step from hereon is to move from semantically informed text-based models to explicit knowledge representations. Our research also shows, that differentiating between different semantic representations of citation contexts due to varying ways of citing information is reasonable. Developing different citation recommendation approaches, depending on the semantic citation types, might therefore be a promising next step in our research.

Notes

- 1.

- 2.

See https://github.com/IllDepence/ecir2020 for a full list.

- 3.

See https://github.com/IllDepence/ecir2020 for details on the evaluation.

- 4.

To validate our findings, we further analyze the NDCG@5 results and note a statistically significant improvement for the arXiv, MAG and RefSeer data but no significant difference for the ACL-ARC data set.

References

Beel, J., Dinesh, S.: Real-world recommender systems for academia: the pain and gain in building, operating, and researching them. In: Proceedings of the Fifth Workshop on Bibliometric-enhanced Information Retrieval (BIR) co-located with the 39th European Conference on Information Retrieval (ECIR 2017), pp. 6–17 (2017)

Beel, J., Gipp, B., Langer, S., Breitinger, C.: Research-paper recommender systems: a literature survey. Int. J. Digit. Libr. 17(4), 305–338 (2015). https://doi.org/10.1007/s00799-015-0156-0

Bird, S., et al.: The ACL anthology reference corpus: a reference dataset for bibliographic research in computational linguistics. In: Proceedings of the 6th International Conference on Language Resources and Evaluation, LREC 2008 (2008)

Corro, L.D., Gemulla, R.: ClausIE: clause-based open information extraction. In: Proceedings of the 22nd International World Wide Web Conference, WWW 2013, pp. 355–366 (2013)

Duma, D., Klein, E.: Citation resolution: a method for evaluating context-based citation recommendation systems. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, ACL 2014, pp. 358–363 (2014)

Duma, D., Klein, E., Liakata, M., Ravenscroft, J., Clare, A.: Rhetorical classification of anchor text for citation recommendation. D-Lib Mag. 22 (2016). https://doi.org/10.1045/september2016-duma. http://www.dlib.org/dlib/september16/09contents.html

Ebesu, T., Fang, Y.: Neural citation network for context-aware citation recommendation. In: Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1093–1096 (2017)

Gábor, K., Buscaldi, D., Schumann, A., QasemiZadeh, B., Zargayouna, H., Charnois, T.: SemEval-2018 task 7: semantic relation extraction and classification in scientific papers. In: Proceedings of the 12th International Workshop on Semantic Evaluation, SemEval@NAACL-HLT 2018, pp. 679–688 (2018)

He, Q., Kifer, D., Pei, J., Mitra, P., Giles, C.L.: Citation recommendation without author supervision. In: Proceedings of the Forth International Conference on Web Search and Web Data Mining, WSDM 2011, pp. 755–764 (2011)

He, Q., Pei, J., Kifer, D., Mitra, P., Giles, L.: Context-aware citation recommendation. In: Proceedings of the 19th International Conference on World Wide Web, WWW 2010, pp. 421–430. ACM, New York (2010)

Huang, W., Wu, Z., Liang, C., Mitra, P., Giles, C.L.: A neural probabilistic model for context based citation recommendation. In: Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, AAAI 2015, pp. 2404–2410 (2015)

Huang, W., Wu, Z., Mitra, P., Giles, C.L.: Refseer: a citation recommendation system. In: Proceedings of the IEEE/ACM Joint Conference on Digital Libraries, JCDL 2014, pp. 371–374 (2014)

Jaradeh, M.Y., et al.: Open research knowledge graph: next generation infrastructure for semantic scholarly knowledge. In: Proceedings of the 10th International Conference on Knowledge Capture, K-CAP 2019, pp. 243–246 (2019)

Jeong, C., Jang, S., Shin, H., Park, E., Choi, S.: A Context-Aware Citation Recommendation Model with BERT and Graph Convolutional Networks (2019). http://arxiv.org/abs/1903.06464

Jha, R., Jbara, A.A., Qazvinian, V., Radev, D.R.: NLP-driven citation analysis for scientometrics. Nat. Lang. Eng. 23(1), 93–130 (2017)

Kobayashi, Y., Shimbo, M., Matsumoto, Y.: Citation recommendation using distributed representation of discourse facets in scientific articles. In: Proceedings of the 18th ACM/IEEE on Joint Conference on Digital Libraries, JCDL 2018, pp. 243–251 (2018)

Mausam, M.: Open information extraction systems and downstream applications. In: Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, IJCAI 2016, pp. 4074–4077 (2016)

Schmitz, M., Bart, R., Soderland, S., Etzioni, O.: Open language learning for information extraction. In: Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, EMNLP-CoNLL 2012, Stroudsburg, USA, pp. 523–534 (2012)

Middleton, S.E., Roure, D.D., Shadbolt, N.: Capturing knowledge of user preferences: ontologies in recommender systems. In: Proceedings of the First International Conference on Knowledge Capture, K-CAP 2001, pp. 100–107 (2001)

Nivre, J., et al.: Universal dependencies v1: a multilingual treebank collection. In: Proceedings of the Tenth International Conference on Language Resources and Evaluation, LREC 2016, pp. 1659–1666 (2016)

Peroni, S., Shotton, D.M.: FaBiO and CiTO: ontologies for describing bibliographic resources and citations. J. Web Semant. 17, 33–43 (2012)

Petrić, B.: Rhetorical functions of citations in high- and low-rated master’s theses. J. Engl. Acad. Purp. 6(3), 238–253 (2007)

Prasad, A., Kaur, M., Kan, M.Y.: Neural ParsCit: a deep learning based reference string parser. Int. J. Digit. Libr. 19, 323–337 (2018). https://doi.org/10.1007/s00799-018-0242-1

Saier, T., Färber, M.: Bibliometric-enhanced arXiv: a data set for paper-based and citation-based tasks. In: Proceedings of the 8th International Workshop on Bibliometric-enhanced Information Retrieval, BIR 2019, pp. 14–26 (2019)

Sinha, A., et al.: An overview of Microsoft academic service (MAS) and applications. In: Proceedings of the 24th International Conference on World Wide Web, WWW 2015, pp. 243–246 (2015)

Thompson, P.: A pedagogically-motivated corpus-based examination of PhD theses: macrostructure, citation practices and uses of modal verbs. Ph.D. thesis, University of Reading (2001)

White, A.S., et al.: Universal decompositional semantics on universal dependencies. In: Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, EMNLP 2016, pp. 1713–1723 (2016)

Zarrinkalam, F., Kahani, M.: A multi-criteria hybrid citation recommendation system based on linked data. In: Proceedings of the 2nd International eConference on Computer and Knowledge Engineering, ICCKE 2012, pp. 283–288 (2012)

Zarrinkalam, F., Kahani, M.: SemCiR: a citation recommendation system based on a novel semantic distance measure. Program: Electron. Libr. Inf. Syst. 47, 92–112 (2013)

Zhang, S., Rudinger, R., Durme, B.V.: An evaluation of PredPatt and open IE via stage 1 semantic role labeling. In: Proceedings of the 12th International Conference on Computational Semantics, IWCS 2017 (2017)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Saier, T., Färber, M. (2020). Semantic Modelling of Citation Contexts for Context-Aware Citation Recommendation. In: Jose, J., et al. Advances in Information Retrieval. ECIR 2020. Lecture Notes in Computer Science(), vol 12035. Springer, Cham. https://doi.org/10.1007/978-3-030-45439-5_15

Download citation

DOI: https://doi.org/10.1007/978-3-030-45439-5_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-45438-8

Online ISBN: 978-3-030-45439-5

eBook Packages: Computer ScienceComputer Science (R0)